Managing model inventory

Register and track all AI models across your organization.

Overview

A model inventory is a comprehensive catalog of all AI models and systems used within your organization. Just as financial assets require tracking for accounting and compliance, AI models require similar oversight to ensure proper governance, risk management, and regulatory compliance.

Without a centralized inventory, organizations often lose track of which AI models are in use, who is responsible for them, and what data they process. This lack of visibility creates compliance risks, security blind spots, and operational inefficiencies. A well-maintained inventory answers fundamental questions: What AI do we have? Where is it deployed? Who owns it? What risks does it present?

Why maintain a model inventory?

- Regulatory compliance: The EU AI Act and other regulations require organizations to maintain records of AI systems, especially high-risk applications

- Risk visibility: You cannot manage risks you do not know exist. An inventory surfaces all AI systems for risk assessment

- Accountability: Clear ownership ensures someone is responsible for each model's performance, compliance, and maintenance

- Audit readiness: When auditors or regulators ask about your AI use, you can provide immediate, accurate answers

- Resource planning: Understanding your AI landscape helps allocate governance resources where they matter most

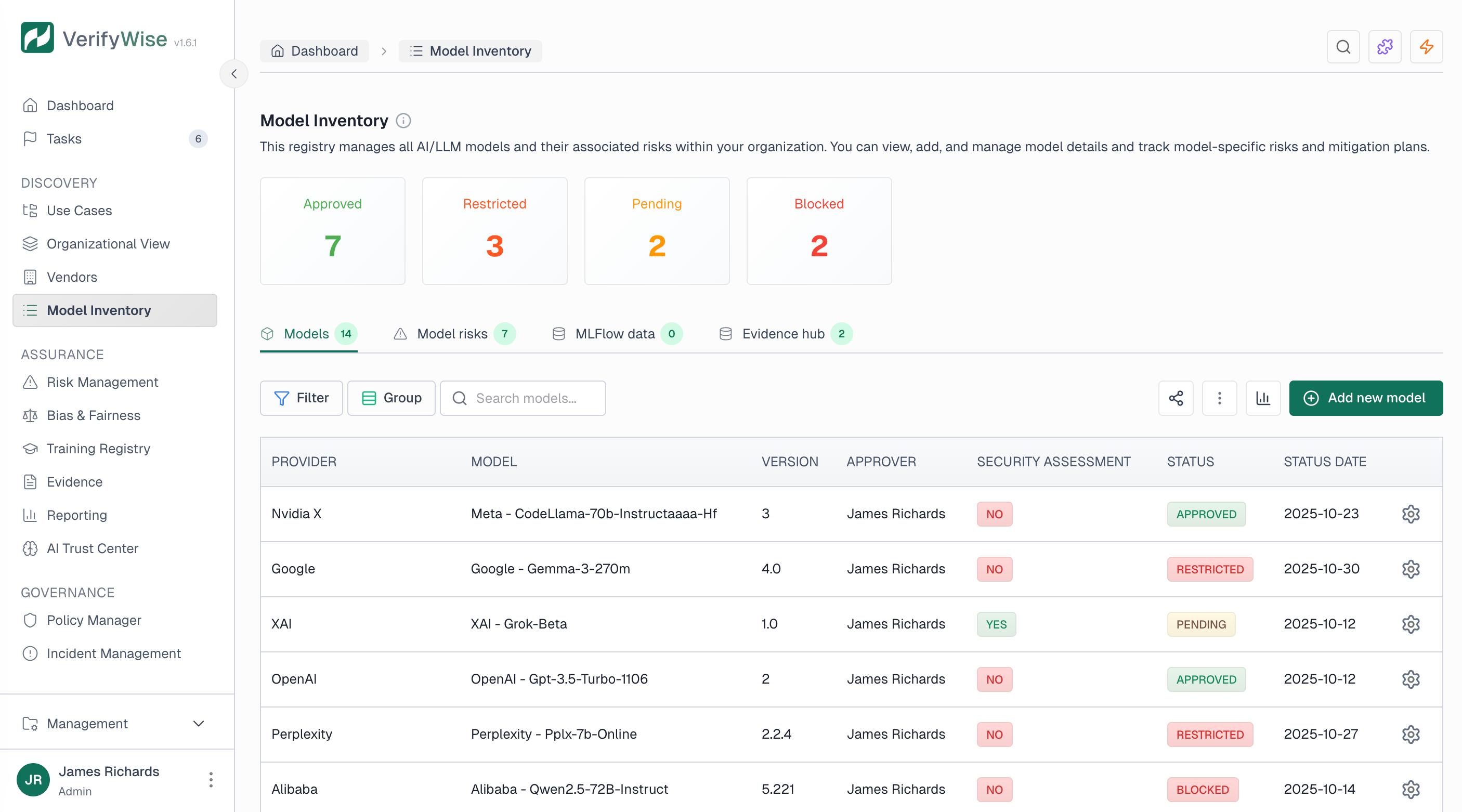

Accessing the model inventory

Navigate to Model inventory from the main sidebar. The inventory displays all registered models in a searchable table with filtering options for status, provider, and other attributes.

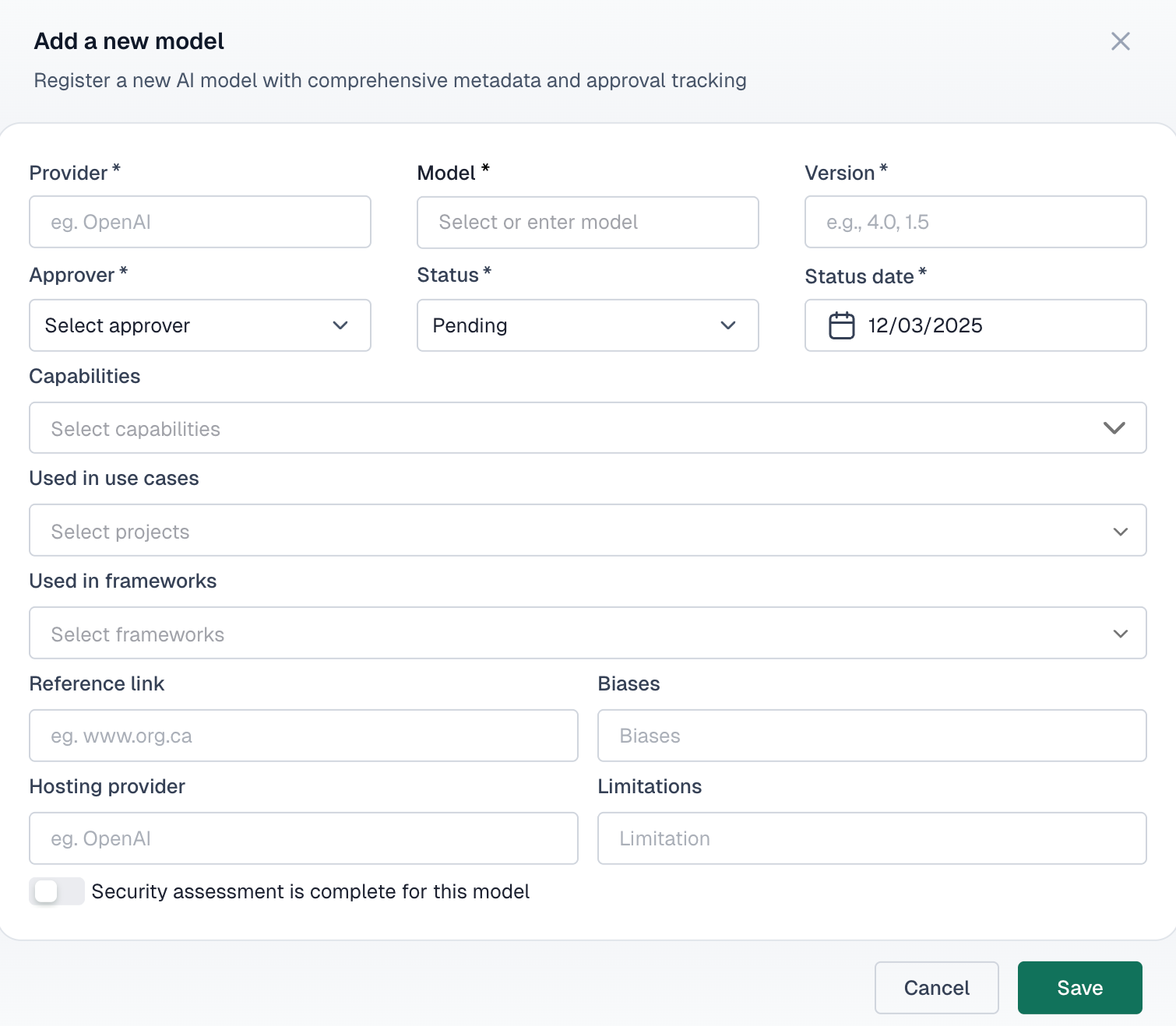

Registering a new model

To add a new AI model to your inventory, click the Add model button and provide the required information:

- Provider: The organization or service that provides the model (e.g., OpenAI, Anthropic, internal team)

- Model name: The specific model identifier (e.g., GPT-4, Claude 3, custom-classifier-v2)

- Version: The version number or release identifier

- Approver: The person responsible for approving this model for use
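As an illustration of the four required fields, the following is a minimal Python sketch of a registration record with a completeness check. The `ModelRegistration` class and `missing_fields` helper are hypothetical names used only for this example; they are not part of the VerifyWise interface, which collects these values through the Add model form.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRegistration:
    """Hypothetical record mirroring the four required fields listed above."""
    provider: str    # e.g. "OpenAI", "Anthropic", "internal team"
    model_name: str  # e.g. "GPT-4", "custom-classifier-v2"
    version: str     # version number or release identifier
    approver: str    # person responsible for approving the model


def missing_fields(reg: ModelRegistration) -> list[str]:
    """Return the names of required fields that are empty or only whitespace."""
    return [name for name, value in vars(reg).items() if not value.strip()]


# A record with no approver is reported as incomplete.
draft = ModelRegistration("internal team", "custom-classifier-v2", "2", "")
print(missing_fields(draft))
```

A check like this can be useful when preparing a list of models outside the application before entering them, so that incomplete entries are caught early.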

Model attributes

Each model in your inventory can include detailed attributes to support governance and risk assessment:

- Capabilities: Document what the model can do, such as text generation, classification, or image analysis

- Known biases: Record any identified biases or fairness concerns with the model

- Limitations: Document constraints and scenarios where the model should not be used

- Hosting provider: Where the model is hosted, whether with a cloud provider, on-premises, or in a hybrid setup

Approval status

Every model in the inventory has an approval status that controls whether it can be used in your organization:

- Pending: Model is awaiting review and approval before use

- Approved: Model has been reviewed and authorized for production use

- Restricted: Model is approved for limited use cases or specific projects only

- Blocked: Model is not authorized for use in the organization
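The four statuses can be read as a simple gate on usage. The sketch below shows one possible interpretation in Python; the `ApprovalStatus` enum, the `is_use_permitted` function, and the idea of an explicit set of allowed use cases for Restricted models are assumptions made for illustration, not a description of how VerifyWise enforces these statuses.

```python
from enum import Enum


class ApprovalStatus(Enum):
    PENDING = "Pending"
    APPROVED = "Approved"
    RESTRICTED = "Restricted"
    BLOCKED = "Blocked"


def is_use_permitted(status, use_case, allowed_use_cases=frozenset()):
    """Decide whether a model may be used for a given use case.

    Assumed rules: Approved models are always permitted, Restricted models
    only for explicitly listed use cases, Pending and Blocked models never.
    """
    if status is ApprovalStatus.APPROVED:
        return True
    if status is ApprovalStatus.RESTRICTED:
        return use_case in allowed_use_cases
    return False


# A Restricted model is permitted only for the use cases it was cleared for.
cleared = frozenset({"internal-search"})
print(is_use_permitted(ApprovalStatus.RESTRICTED, "internal-search", cleared))
print(is_use_permitted(ApprovalStatus.RESTRICTED, "customer-chat", cleared))
```

Treating Pending the same as Blocked is a conservative default: a model that has not yet been reviewed is not used until someone approves it.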

Security assessment

Models can be flagged as having completed a security assessment. When this flag is enabled, you can attach security assessment documentation directly to the model record for easy reference during audits.

Linking evidence

The model inventory integrates with the Evidence Hub, allowing you to link supporting documentation to each model:

- Model cards and technical documentation

- Vendor contracts and data processing agreements

- Security assessment reports

- Bias testing results and fairness evaluations

- Performance benchmarks and validation studies

MLflow integration

For organizations using MLflow for ML operations, VerifyWise can import model training metadata directly. This provides visibility into model development metrics including training timestamps, parameters, and lifecycle stages.
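To give a sense of what such metadata looks like, here is a rough Python sketch that maps MLflow-style fields to an inventory summary. The input keys (`name`, `version`, `current_stage`, `creation_timestamp` in milliseconds since the epoch) follow the attributes of MLflow's model version entity; the `summarize_model_version` function and the shape of its output are hypothetical, and the way VerifyWise actually performs the import is not described here.

```python
from datetime import datetime, timezone


def summarize_model_version(mv: dict, run_params: dict) -> dict:
    """Map MLflow-style model version fields to a hypothetical inventory summary.

    `mv` is a plain dict using MLflow's field names; `run_params` holds the
    parameters logged for the training run that produced the version.
    """
    # MLflow reports timestamps in milliseconds since the Unix epoch.
    created = datetime.fromtimestamp(mv["creation_timestamp"] / 1000, tz=timezone.utc)
    return {
        "model_name": mv["name"],
        "version": str(mv["version"]),
        "lifecycle_stage": mv.get("current_stage") or "None",
        "created_at": created.isoformat(),
        "parameters": dict(run_params),
    }


# Illustrative values only; real values would come from an MLflow tracking server.
example = {"name": "custom-classifier", "version": 2,
           "current_stage": "Staging", "creation_timestamp": 86_400_000}
print(summarize_model_version(example, {"learning_rate": "0.01"}))
```

Keeping the summary as a plain dictionary makes it easy to compare against the corresponding inventory record by eye.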

Change history

VerifyWise automatically maintains a complete audit trail for every model in your inventory. Each change records:

- The field that was modified

- Previous and new values

- Who made the change

- When the change occurred

This history is essential for demonstrating governance practices during compliance audits and regulatory reviews.
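The four items recorded for each change can be pictured as one entry per modified field. The following Python sketch derives such entries by comparing two snapshots of a model record; the `diff_changes` function and the entry keys are hypothetical and only illustrate the structure of an audit trail, not VerifyWise's internal format.

```python
from datetime import datetime, timezone


def diff_changes(before: dict, after: dict, changed_by: str, changed_at=None) -> list[dict]:
    """Return one audit entry for every field whose value differs."""
    changed_at = changed_at or datetime.now(timezone.utc)
    entries = []
    for field in sorted(before.keys() | after.keys()):
        old, new = before.get(field), after.get(field)
        if old != new:
            entries.append({
                "field": field,            # the field that was modified
                "old_value": old,          # previous value (None if newly added)
                "new_value": new,          # new value (None if removed)
                "changed_by": changed_by,  # who made the change
                "changed_at": changed_at,  # when the change occurred
            })
    return entries


# Approving a model produces a single entry for the status field.
before = {"status": "Pending", "version": "1.0"}
after = {"status": "Approved", "version": "1.0"}
for entry in diff_changes(before, after, changed_by="jane.doe"):
    print(entry["field"], entry["old_value"], "->", entry["new_value"])
```

Recording one entry per field, rather than one per save, keeps the history easy to filter when an auditor asks about a specific attribute such as approval status.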