EU AI Act vs ISO 42001: Key Differences for AI Governance Strategy

With the global AI governance market projected to grow from $227.65 million in 2024 to $4.3 billion by 2033, and 78% of organizations now deploying AI systems, understanding the differences between the EU AI Act and ISO 42001 is business-critical.

This guide covers the fundamental differences between these frameworks, their overlap, and how to leverage both for competitive advantage.

The Challenge: Two Different AI Governance Approaches

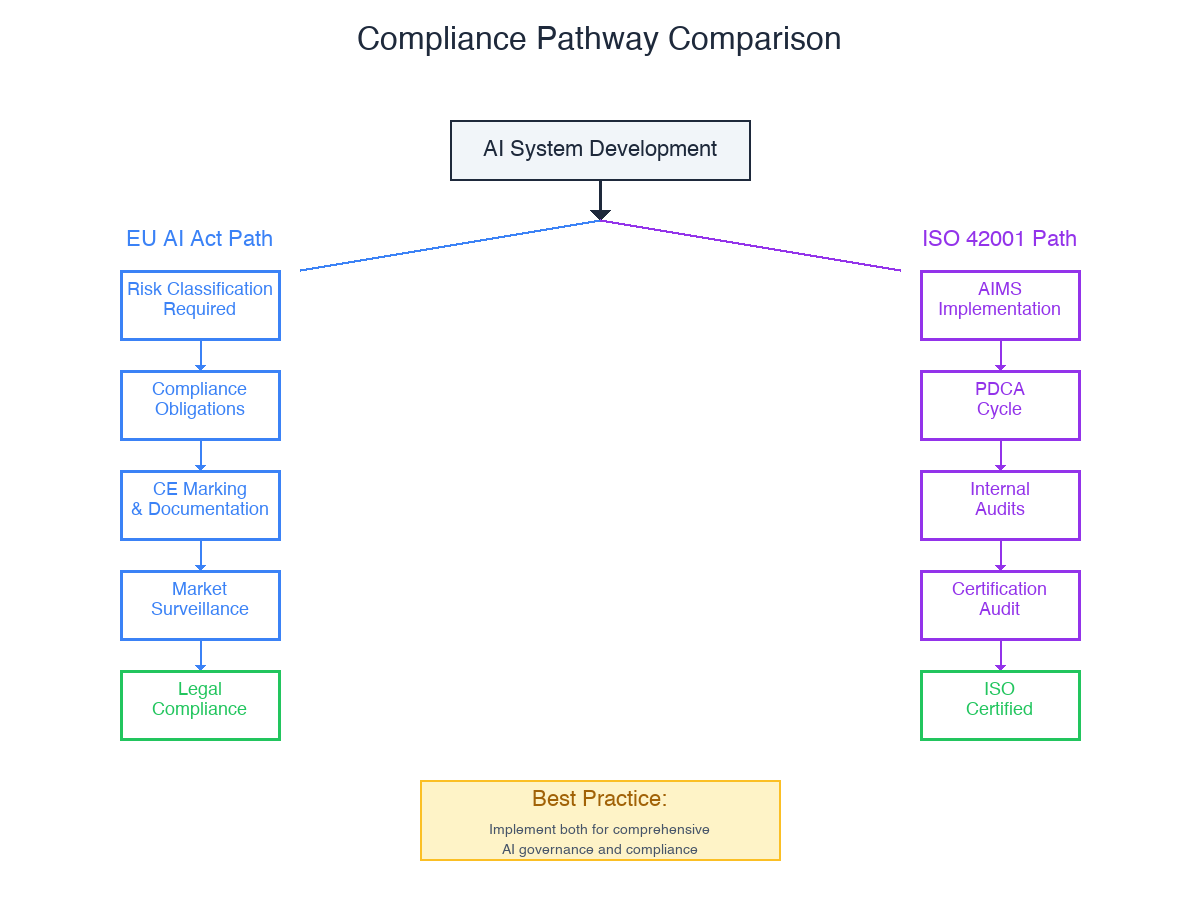

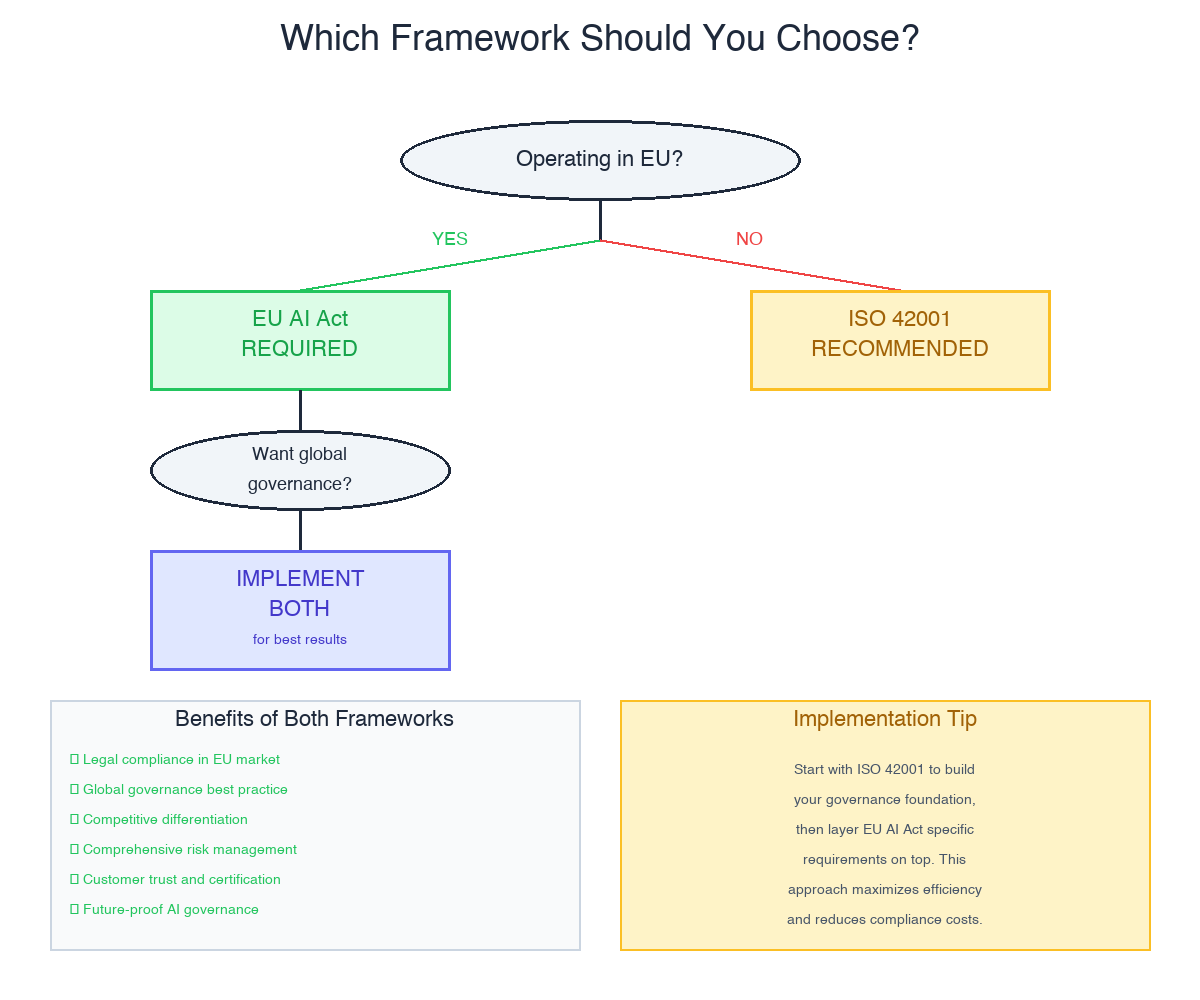

Organizations face a choice: comply with the legally binding EU AI Act, pursue voluntary ISO 42001 certification, or manage both. The EU AI Act entered into force on August 1, 2024, and its first obligations have applied since February 2, 2025. It is the world's first comprehensive legal framework for AI. ISO/IEC 42001, published in December 2023, is the first international standard for AI management systems.

Companies whose AI systems reach the EU market must comply with the AI Act. Many also value ISO 42001 certification as a way to demonstrate responsible AI practices globally. You need to understand which framework serves your needs and how the two work together.

Understanding the EU AI Act

The EU AI Act is binding legislation with extraterritorial reach affecting any organization deploying AI in the European market.

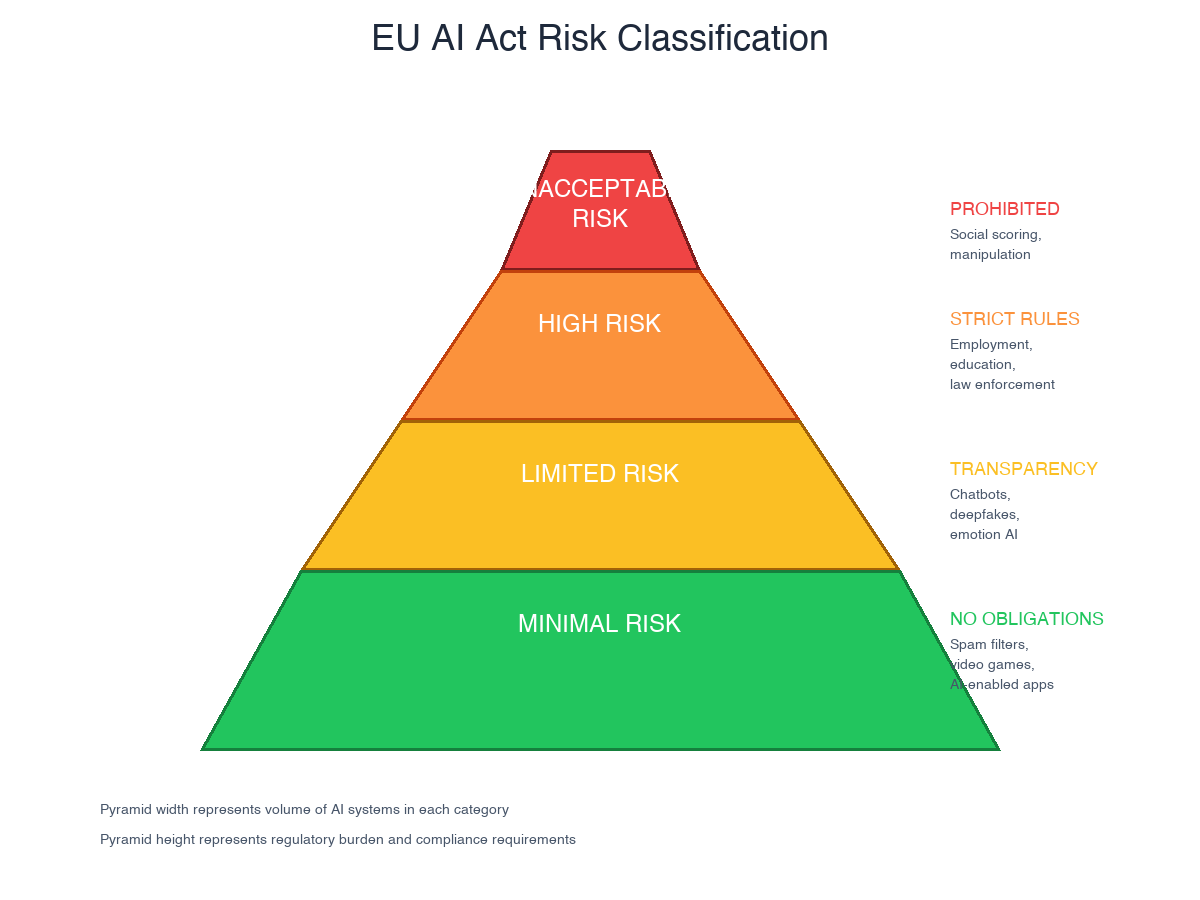

Risk-Based Classification

The Act categorizes AI systems into four tiers: unacceptable risk (prohibited), high risk, limited risk, and minimal risk.

Prohibited AI practices include systems that use manipulative or deceptive techniques to distort human behavior, social scoring, and real-time remote biometric identification in publicly accessible spaces for law enforcement (with narrow exceptions). Violations can trigger fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher.

High-risk AI systems include those used in employment, education, law enforcement, or critical infrastructure. They must pass conformity assessments and are subject to requirements for technical documentation, risk management systems, and post-market monitoring.
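The tiering logic can be illustrated with a minimal Python sketch of a first-pass screening step. It assumes hypothetical tags (`practices`, `use_area`, `interacts_with_people`) and uses small illustrative subsets of the prohibited practices and high-risk areas. The legal lists are in Article 5 and Annex III, and a real classification needs legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable (prohibited)"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative subsets only: the legal lists are in Article 5 and Annex III.
PROHIBITED_PRACTICES = {"social_scoring", "manipulative_techniques"}
HIGH_RISK_AREAS = {"employment", "education", "law_enforcement", "critical_infrastructure"}


@dataclass
class AISystem:
    name: str
    practices: set[str]          # hypothetical tags describing what the system does
    use_area: str                # hypothetical tag for the deployment domain
    interacts_with_people: bool  # hypothetical flag for person-facing systems


def classify(system: AISystem) -> RiskTier:
    """First-pass tier; checks run from most to least severe."""
    if system.practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if system.use_area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if system.interacts_with_people:
        # Simplification: person-facing systems such as chatbots carry transparency duties.
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

For example, `classify(AISystem("cv-screener", set(), "employment", True))` returns `RiskTier.HIGH`. The checks run from most to least severe, so a prohibited practice overrides every other attribute.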

Implementation Timeline

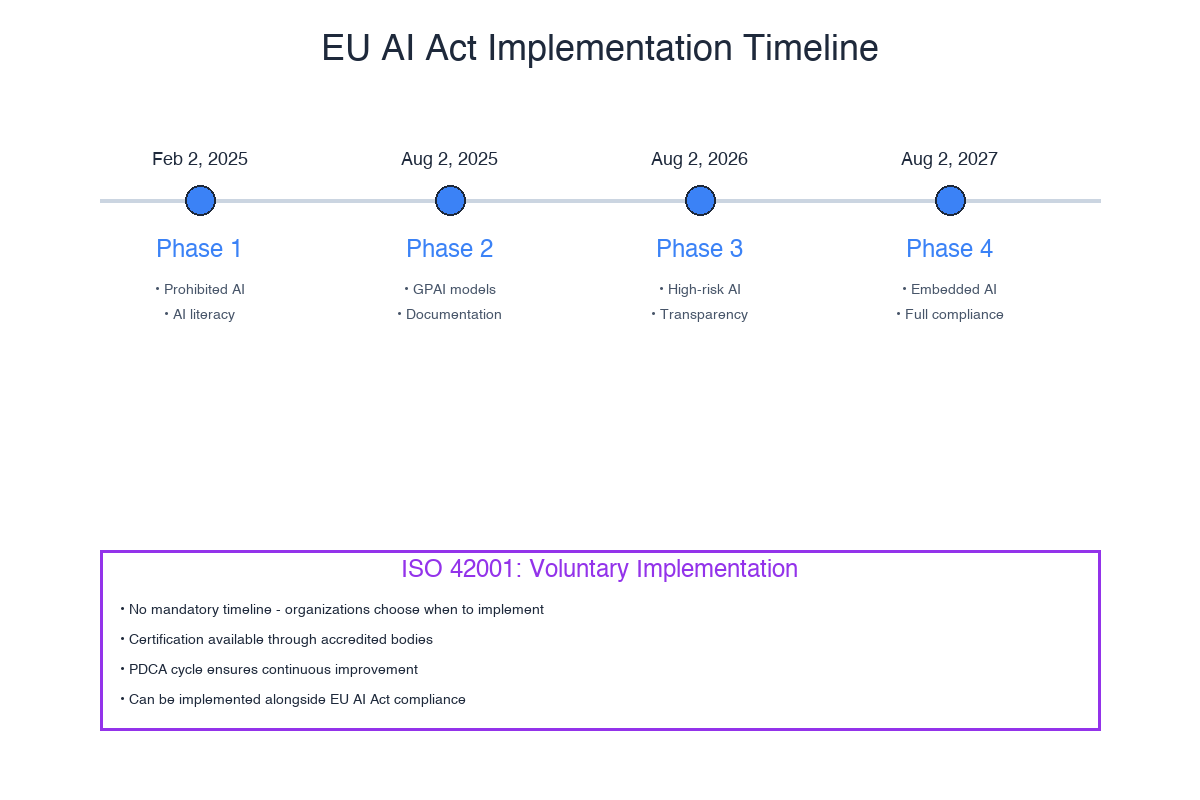

- February 2, 2025: Prohibited practices and AI literacy requirements in effect

- August 2, 2025: General-purpose AI (GPAI) model providers must maintain technical documentation, put in place a policy to comply with EU copyright law, and publish a summary of their training content

- August 2, 2026: Obligations for high-risk AI systems listed in Annex III (such as employment and education uses) apply

- August 2, 2027: Extended deadline for high-risk AI embedded in products already covered by EU product-safety legislation (Annex I)

For more details, see our guide on understanding the EU AI Act implications and compliance.
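A compliance calendar can encode these milestones directly. The sketch below is illustrative. The helper names are hypothetical, and the dates come from the timeline above. Any later amendment to the schedule would have to be added by hand.

```python
from datetime import date
from typing import Optional, Tuple

# Application dates from the timeline above.
MILESTONES = [
    (date(2025, 2, 2), "Prohibited practices and AI literacy requirements"),
    (date(2025, 8, 2), "GPAI model provider obligations"),
    (date(2026, 8, 2), "High-risk AI obligations"),
    (date(2027, 8, 2), "High-risk AI in regulated products"),
]


def obligations_in_force(on: date) -> list[str]:
    """Labels of every milestone whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_deadline(on: date) -> Optional[Tuple[date, str]]:
    """The first milestone strictly after `on`, or None once all have passed."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return min(upcoming) if upcoming else None
```

Called with `date(2025, 11, 5)`, `obligations_in_force` returns the first two milestones and `next_deadline` returns the August 2, 2026 entry.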

Understanding ISO 42001

ISO/IEC 42001 is a voluntary international standard for AI management systems (AIMS). It follows the established ISO management system structure used in ISO 9001 (quality), ISO 27001 (information security), and ISO 14001 (environmental management).

The standard applies the Plan-Do-Check-Act cycle for continuous improvement. It follows the ten-clause ISO harmonized structure, and its requirements sit in clauses 4 to 10: context of the organization, leadership, planning, support, operation, performance evaluation, and improvement. Annex A lists 38 AI-specific controls covering areas such as data governance, transparency, human oversight, and accountability.

Unlike the EU AI Act's prescriptive requirements, ISO 42001 lets organizations tailor controls to their context and record which Annex A controls apply, with justifications, in a Statement of Applicability. Certification comes through third-party audits but remains voluntary, and there are no legal penalties for non-compliance.
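The sketch below shows one common way to map the requirement clauses onto Plan-Do-Check-Act. The grouping is an interpretive convention, not text from the standard, and `phase_of` is a hypothetical helper.

```python
# Requirement clauses of the ISO harmonized structure, grouped by PDCA phase.
# Clauses 1-3 (scope, normative references, terms) contain no requirements.
PDCA_PHASES = {
    "Plan": {4: "Context of the organization", 5: "Leadership", 6: "Planning", 7: "Support"},
    "Do": {8: "Operation"},
    "Check": {9: "Performance evaluation"},
    "Act": {10: "Improvement"},
}


def phase_of(clause: int) -> str:
    """Return the PDCA phase for a requirement clause (4 to 10)."""
    for phase, clauses in PDCA_PHASES.items():
        if clause in clauses:
            return phase
    raise ValueError(f"Clause {clause} is not a requirement clause")
```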

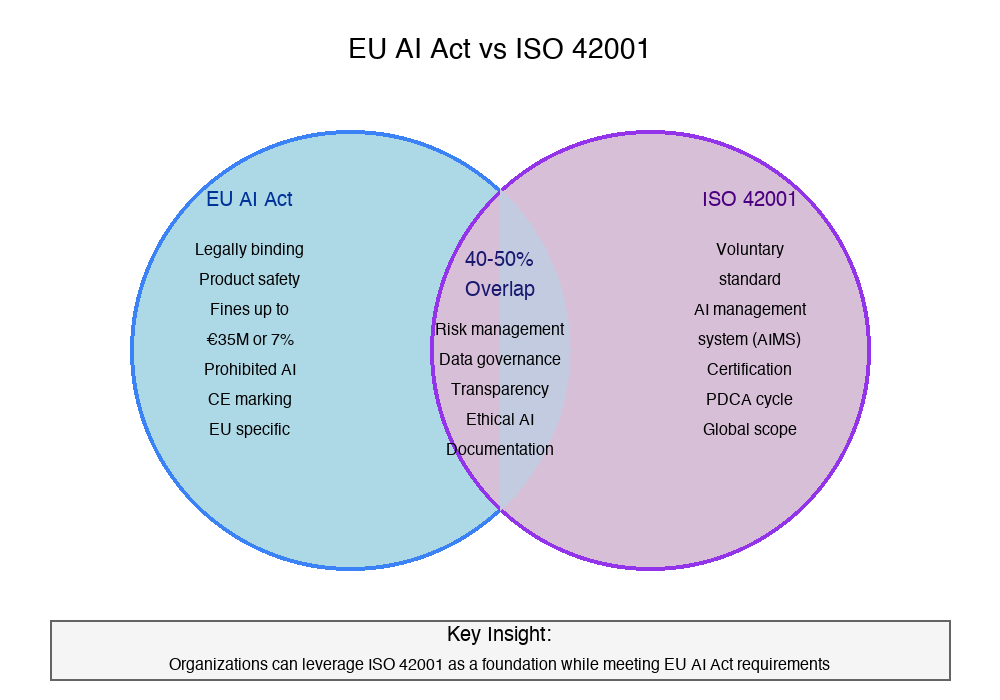

The 40-50% Overlap: Where Frameworks Align

Research suggests approximately 40-50% overlap in high-level requirements around risk management, data governance, transparency, and ethics.

Risk Management: Both emphasize risk-based approaches. The EU AI Act's four-tier classification and its Article 9 risk management system for high-risk AI parallel ISO 42001's requirements to assess and treat AI-specific risks (clauses 6.1.2 and 6.1.3).

Data Governance: Article 10 of the EU AI Act prescribes detailed data governance for high-risk systems. ISO 42001 addresses similar themes through its controls on data for AI systems (Annex A.7).

Documentation: Both demand substantial documentation. Technical descriptions prepared for ISO 42001 audits can be adapted to the EU AI Act's technical documentation requirements (Article 11 and Annex IV).

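Ethics: Both embed ethical considerations. The EU AI Act's fundamental rights impact assessment (Article 27, required for certain deployers of high-risk systems) parallels ISO 42001's AI system impact assessment (clause 6.1.4), which asks organizations to consider fairness, non-discrimination, and human dignity.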

For organizations serious about AI governance frameworks and best practices, recognizing overlaps enables efficient resource allocation.
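Teams often record overlaps like these in a crosswalk. The mapping below is an illustrative sketch, not an official correspondence. The ISO 42001 clause and Annex A references are assumptions to verify against the published standard. The empty entry for Article 5 reflects that ISO 42001 has no counterpart to the Act's prohibitions.

```python
# Illustrative crosswalk (assumed references, not an official mapping).
# Keys: EU AI Act provisions. Values: ISO/IEC 42001 clauses or Annex A controls.
CROSSWALK = {
    "Art. 5 (prohibited practices)": [],
    "Art. 9 (risk management system)": ["6.1.2 AI risk assessment", "6.1.3 AI risk treatment"],
    "Art. 10 (data and data governance)": ["A.7 Data for AI systems"],
    "Art. 11 (technical documentation)": ["A.6.2.7 AI system technical documentation"],
    "Art. 12 (record-keeping)": ["A.6.2.8 AI system recording of event logs"],
    "Art. 27 (fundamental rights impact assessment)": ["6.1.4 AI system impact assessment"],
}


def gaps(crosswalk: dict[str, list[str]]) -> list[str]:
    """EU AI Act provisions with no ISO 42001 counterpart in the mapping."""
    return [provision for provision, iso_refs in crosswalk.items() if not iso_refs]
```

Here `gaps(CROSSWALK)` returns only the Article 5 entry. That provision needs EU AI Act work that ISO 42001 certification would not cover.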

Critical Differences

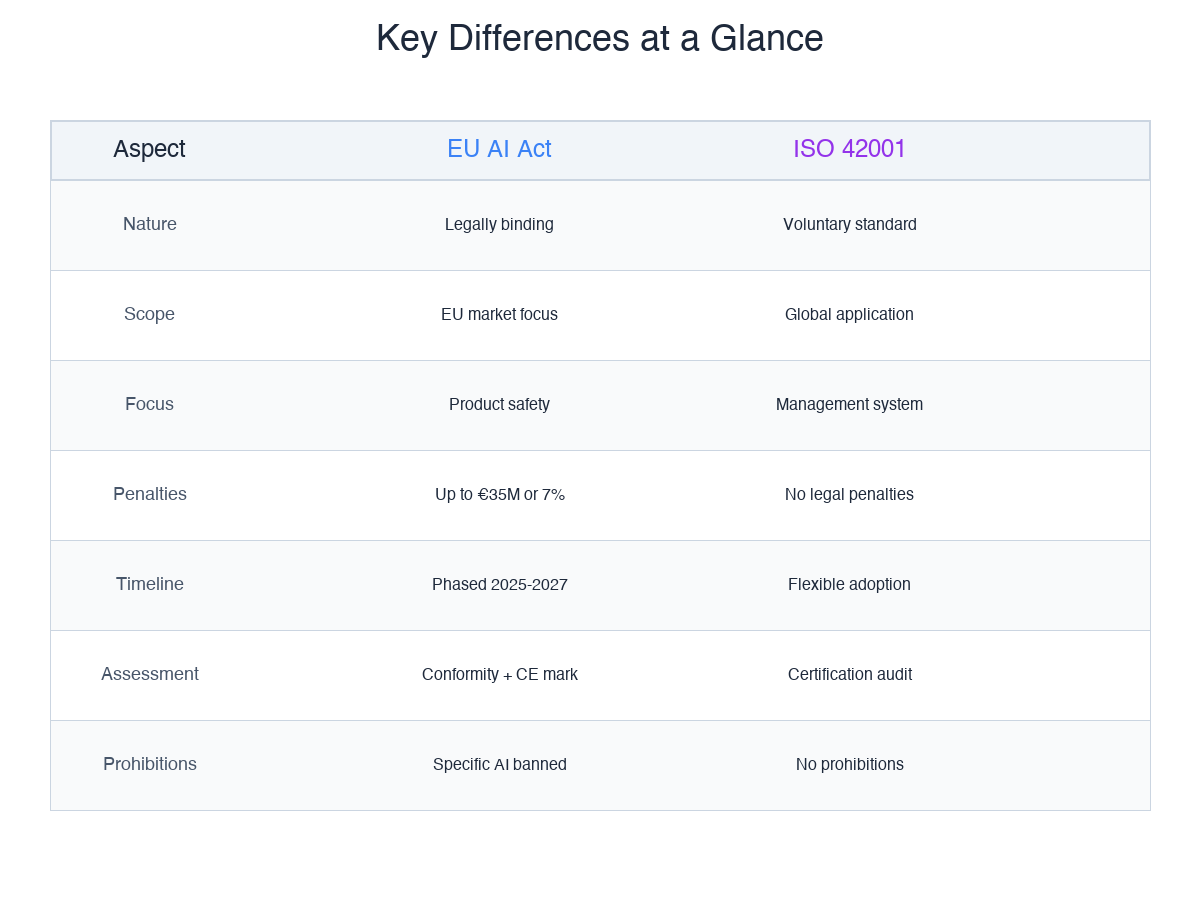

Legal Force vs. Voluntary Adoption

The EU AI Act is binding legislation with severe penalties. Fines can reach €35 million or 7% of worldwide annual turnover for prohibited practices, and €15 million or 3% for most other infringements, whichever is higher in each case. ISO 42001 remains voluntary, with no legal penalties for non-compliance.
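Under Article 99, each cap is the higher of the fixed amount and the turnover percentage. For SMEs and start-ups, it is the lower of the two. The hypothetical helper below computes that statutory ceiling. It does not predict actual fines, which regulators set based on factors such as the gravity of the infringement and the degree of cooperation.

```python
# Caps from the paragraph above: (fixed amount in EUR, percent of worldwide annual turnover).
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_infringement": (15_000_000, 3),
}


def fine_ceiling(violation: str, annual_turnover_eur: int, is_sme: bool = False) -> float:
    """Statutory maximum: the higher of the two caps, or the lower for SMEs and start-ups."""
    fixed, percent = FINE_CAPS[violation]
    turnover_cap = annual_turnover_eur * percent / 100
    return min(fixed, turnover_cap) if is_sme else max(fixed, turnover_cap)
```

Take a company with €2 billion in turnover: `fine_ceiling("prohibited_practice", 2_000_000_000)` gives €140 million, because 7% of turnover exceeds the €35 million fixed amount.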

Geographic Scope and Focus

The EU AI Act applies to AI systems on the EU market or whose outputs are used in the EU, regardless of provider location. ISO 42001 applies globally without geographic restrictions.

The EU AI Act follows a product-safety logic: AI systems must meet its requirements before they are placed on the market. ISO 42001 centers on the organization's management system across development, deployment, and operation.

Specificity and Prohibitions

The EU AI Act prescribes specific requirements, including automatically generated logs kept for at least six months, defined documentation content, and particular conformity assessment procedures. ISO 42001 provides principle-based guidance that allows tailored implementations.
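A prescriptive rule like the six-month log minimum translates directly into a retention check. This sketch assumes that "six months" is counted in calendar months from the log date, clamped to the last day of the target month. That interpretation and the helper names are assumptions. Six months is a floor, so other law or internal policy may require keeping logs longer.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the target month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_deletion(log_date: date, min_months: int = 6) -> date:
    """First date a log may be deleted under an assumed six-month minimum."""
    return add_months(log_date, min_months)


def may_delete(log_date: date, today: date) -> bool:
    """True once the minimum retention period has elapsed."""
    return today >= earliest_deletion(log_date)
```

Because of the clamping, a log dated August 31, 2025 becomes deletable on February 28, 2026, not on a nonexistent February 31.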

Most significantly, the EU AI Act prohibits certain AI applications outright: social scoring, manipulative AI, and most real-time remote biometric identification in public spaces. ISO 42001 prohibits nothing. Instead, it requires organizations to identify the legal requirements that apply to them, including any such prohibitions.

Creating a Comprehensive Strategy


The Integrated Governance Model

Rather than treating the frameworks as separate exercises, build one integrated governance program that reuses work across both:

Step 1: Catalog all AI systems your organization develops, deploys, or uses

Step 2: Conduct risk assessments satisfying both frameworks—classify under EU AI Act categories while performing ISO 42001's broader risk analysis

Step 3: Develop documentation templates capturing both frameworks' requirements

Step 4: Implement overlapping controls first, then layer framework-specific requirements

Step 5: Schedule assessments strategically, since preparation for one strengthens the other
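Steps 1, 2, and 4 can be sketched as a small data model. Everything here is hypothetical: `InventoryEntry`, its fields, and the control names in the usage note. The idea is that one inventory record carries both the EU AI Act tier and the ISO 42001 risk entries, and that controls shared by both frameworks are scheduled first.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InventoryEntry:
    """One row of a hypothetical AI system inventory (Step 1)."""
    name: str
    relationship: str                     # "develops", "deploys", or "uses"
    third_party: bool                     # third-party systems still need governing
    eu_ai_act_tier: Optional[str] = None  # Step 2: EU AI Act category, once assessed
    iso_risks: list[str] = field(default_factory=list)  # Step 2: ISO 42001 risk entries


def missing_assessments(inventory: list[InventoryEntry]) -> list[str]:
    """Step 2 check: systems lacking either half of the dual risk assessment."""
    return [e.name for e in inventory if e.eu_ai_act_tier is None or not e.iso_risks]


def control_rollout(eu_controls: set[str], iso_controls: set[str]) -> list[str]:
    """Step 4: schedule controls required by both frameworks before framework-specific ones."""
    shared = sorted(eu_controls & iso_controls)
    specific = sorted(eu_controls ^ iso_controls)
    return shared + specific
```

Consider `control_rollout({"logging", "human oversight", "conformity assessment"}, {"logging", "human oversight", "internal audit"})`. It places human oversight and logging ahead of the framework-specific conformity assessment and internal audit.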

When to Prioritize Each Framework

Prioritize EU AI Act when:

- Operating primarily in the European market

- Developing high-risk AI systems

- Facing imminent compliance deadlines

- Regulatory penalties pose significant risks

Prioritize ISO 42001 when:

- Operating globally across multiple jurisdictions

- Building organizational AI governance capabilities

- Seeking competitive differentiation

- Customers require governance maturity demonstration
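The two checklists can be turned into a rough tally. This is a hypothetical heuristic with made-up signal names, and it is useful only for ordering work. If the EU AI Act applies to you, its obligations are mandatory whatever the tally says.

```python
# Hypothetical signal names paraphrasing the two checklists above.
EU_AI_ACT_SIGNALS = {"eu_primary_market", "high_risk_systems", "imminent_deadline", "penalty_exposure"}
ISO_42001_SIGNALS = {"multi_jurisdiction", "building_capability", "differentiation", "customer_demand"}


def suggest_priority(signals: set[str]) -> str:
    """Tally which checklist matches more of an organization's situation."""
    unknown = signals - EU_AI_ACT_SIGNALS - ISO_42001_SIGNALS
    if unknown:
        raise ValueError(f"Unrecognized signals: {sorted(unknown)}")
    eu = len(signals & EU_AI_ACT_SIGNALS)
    iso = len(signals & ISO_42001_SIGNALS)
    if eu == 0 and iso == 0:
        return "no clear driver"
    if eu > iso:
        return "EU AI Act"
    if iso > eu:
        return "ISO 42001"
    return "both in parallel"
```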

Best Practices and Pitfalls

Best Practices

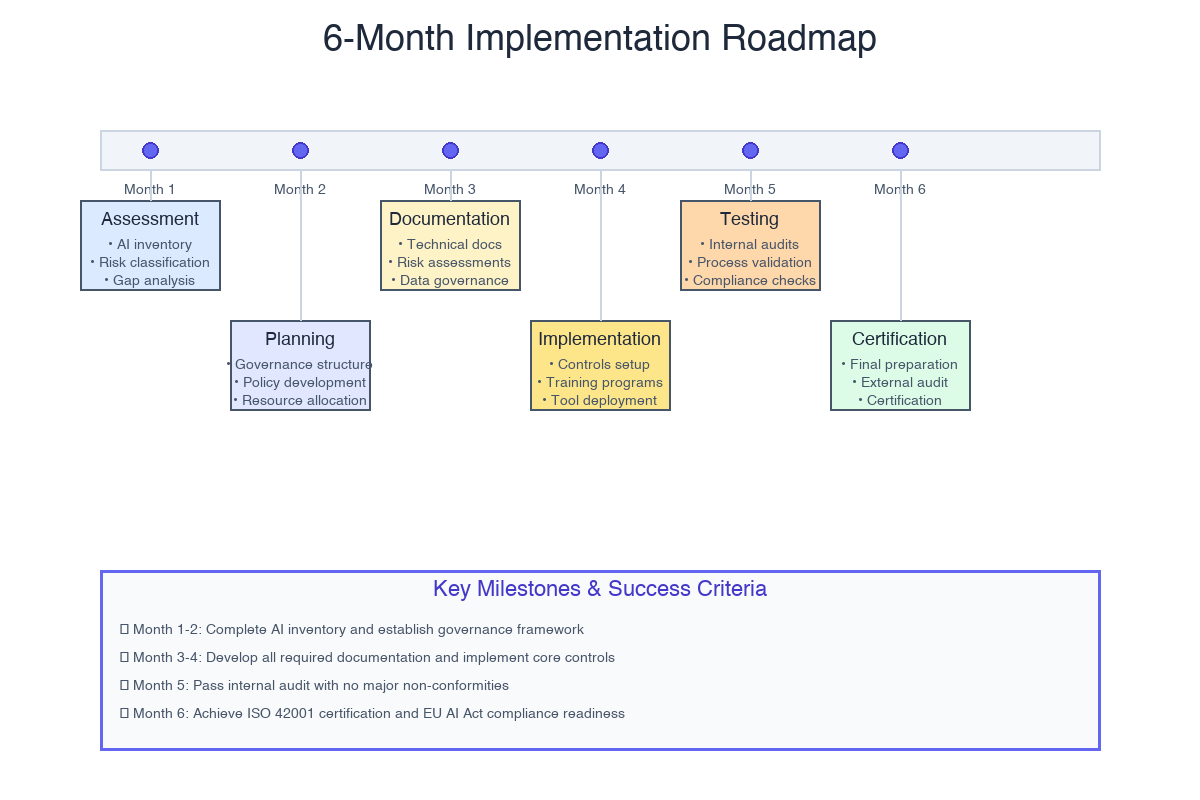

- Start early: Implementation takes longer than expected

- Invest in training: Both frameworks require organizational AI understanding

- Leverage existing systems: ISO 27001 or ISO 9001 certifications provide foundations

- Document systematically: Both frameworks demand extensive documentation

Pitfalls to Avoid

- Treating ISO 42001 as EU AI Act compliance: Overlap exists but certification alone doesn't ensure compliance

- Underestimating documentation: Starting late creates retrofitting challenges

- Neglecting third-party AI: Obligations apply to deployed third-party systems

- Overlooking continuous monitoring: Both frameworks emphasize ongoing assessment

The Future of AI Governance

Other jurisdictions, including Brazil, Canada, South Korea, and Singapore, are developing or refining their own approaches to AI regulation, some influenced by the EU AI Act. This trend suggests ISO 42001 may become increasingly valuable as a global baseline on which jurisdiction-specific requirements can be layered.

The AI governance profession is maturing rapidly. According to the IAPP's 2025 report, dedicated AI governance roles increased 156% year-over-year, with Chief AI Officer positions becoming commonplace.

Key Takeaways

- Different purposes: EU AI Act is binding legislation for product safety; ISO 42001 is voluntary for management systems

- 40-50% overlap creates efficiencies: But one framework doesn't satisfy the other completely

- Integrated approaches maximize efficiency: Don't treat these as separate exercises

- ISO 42001 can provide EU AI Act foundations: Organizations implementing ISO 42001 first often find EU AI Act compliance easier

- AI governance is continuous: Neither framework is a one-time checkpoint

Next Steps

- Conduct an AI system inventory

- Perform dual risk assessments

- Identify gaps between current practices and requirements

- Develop an integrated implementation roadmap

- Invest in AI governance capabilities—roles, training, and technology

For organizations seeking guidance navigating these frameworks, VerifyWise provides integrated solutions supporting both EU AI Act compliance and ISO 42001 certification.

Additional Resources

- AI Governance Frameworks and Best Practices

- Global Approaches to AI Governance: A Comparison

- What is a Chief AI Officer?

- Dedicated AI Governance vs In-House Solutions

Last updated November 5, 2025. Consult legal and compliance professionals for guidance specific to your organization.