Artificial intelligence is reshaping how we work, communicate, and make decisions. The pace of innovation brings potential for positive change, but also risk if the technology is not handled thoughtfully.

The choices we make now about AI development and deployment will have lasting consequences. This guide explores what responsible AI means, why it matters, and how organizations can build AI systems that earn trust.

What Does Responsible AI Mean?

Responsible AI goes beyond technical performance. It means developing systems with ethical awareness and accountability—AI with a conscience.

Practicing responsible AI means building systems that are transparent in operation, fair in treatment, and accountable for decisions. Before deploying any AI system, ask: Who might be affected? Could it disadvantage certain groups? Can we explain its conclusions? What happens when it errs?

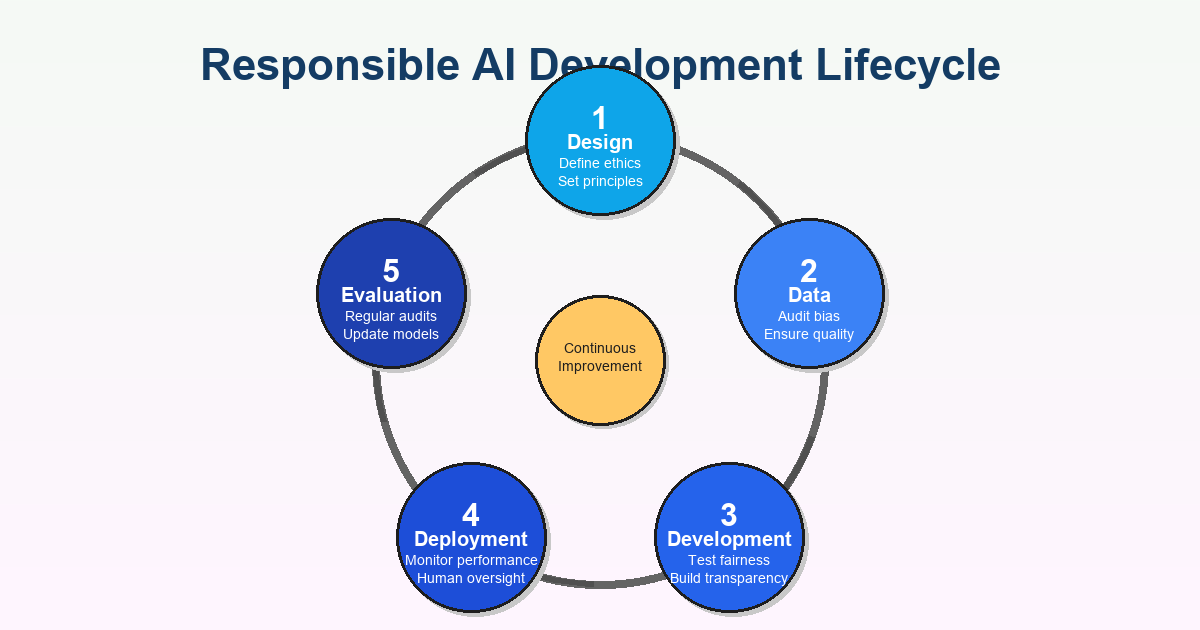

The goal isn't perfection. It's building AI that minimizes harm, respects human rights, and aligns with societal values across the entire lifecycle—from training data to post-deployment monitoring.

Why Organizations Should Care

AI systems now make decisions that affect people's lives: who gets hired, who receives loans, who gets released on bail, what medical treatments are recommended. When these systems work well, they improve efficiency and fairness. When they fail, consequences can be devastating.

Consider bias. AI learns from historical data, and if that data reflects past discrimination, the AI can reproduce those patterns. We've seen hiring algorithms that favor certain demographics, facial recognition that performs poorly on people with darker skin tones, and credit models that disadvantage marginalized communities. These aren't just technical glitches; they're ethical failures that entrench inequality.

Transparency matters equally. Many AI systems operate as black boxes, making decisions their creators can't fully explain. If an AI denies someone a job or flags them as high-risk, they deserve to understand why. Without transparency, accountability disappears and trust erodes.

Privacy and security are critical too. AI systems require vast amounts of data, often including sensitive personal information. Organizations must protect this data and be transparent about collection and usage.

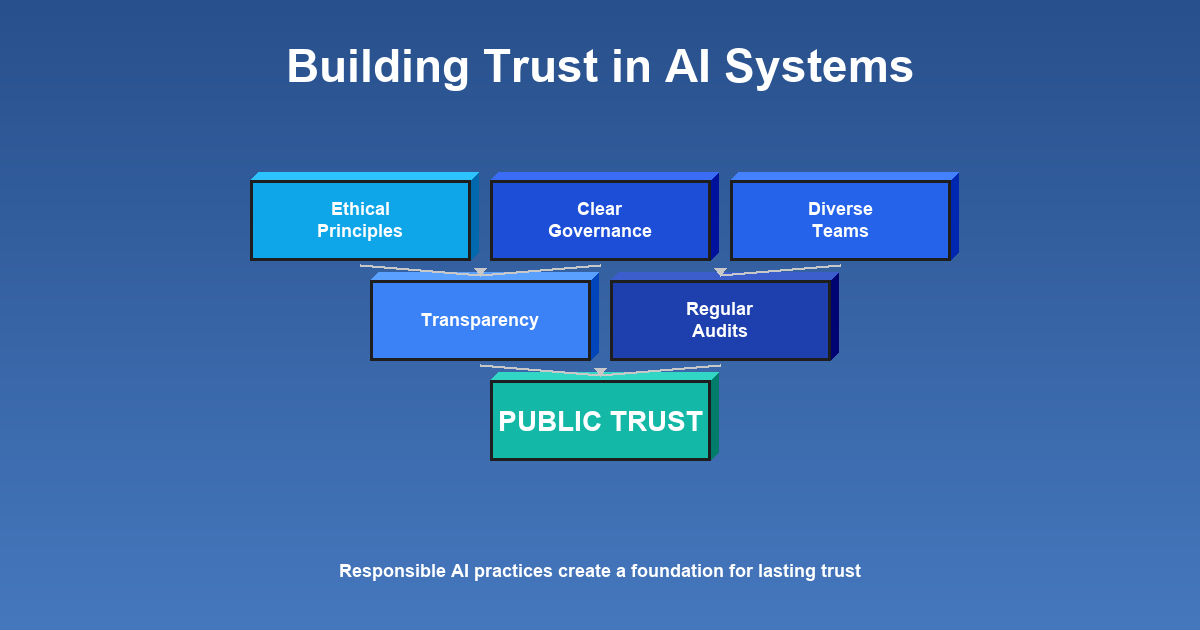

Public confidence in AI must be earned. When organizations cut corners on responsible practices, they risk their reputation and the public's willingness to accept AI broadly. Building trust requires consistent demonstration that systems serve people's interests.

The regulatory landscape is evolving rapidly. Governments worldwide are introducing AI regulations. Organizations that haven't prioritized responsible AI may scramble to comply. Proactive responsibility is both ethically sound and increasingly a legal requirement.

Building Responsible AI: A Practical Approach

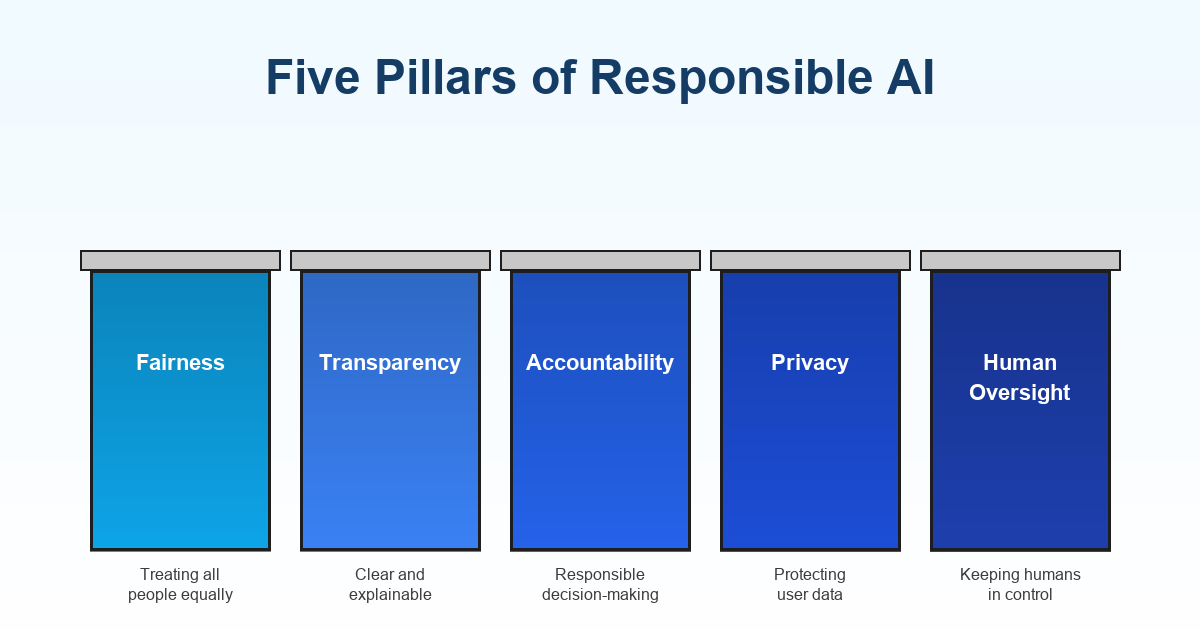

Start with clear ethical principles guiding every stage of development and deployment. These should be specific to your organization but typically include commitments to fairness, transparency, accountability, privacy, and human oversight.

Create a governance framework defining who is responsible for AI decisions, how those decisions get made, and what processes exist for review and audit. Governance shouldn't be a compliance checkbox; it needs to be embedded in organizational culture.

Diversity in development teams makes a real difference. Homogeneous teams have blind spots about how systems might affect different communities. Varied backgrounds and perspectives help identify problems early and design solutions that work for everyone.

Regular audits catch issues before they cause harm. Test for bias, evaluate performance across demographic groups, and monitor real-world impact over time. Testing once at launch isn't enough. Ongoing monitoring is essential because a system's behavior can drift in production as real-world data moves away from the data it was trained on.
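As one way to make such an audit concrete, the sketch below compares the rate of positive decisions across groups and reports the ratio of the lowest rate to the highest. The function names, the sample records, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb used in US employment contexts) are illustrative assumptions, not a method prescribed here. A single metric like this is a starting point for review rather than a complete fairness assessment.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive decisions for each group.

    `records` is an iterable of (group_label, decision) pairs, where
    decision is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any favorable decisions
    return min(rates.values()) / highest


# Hypothetical audit sample: (group, decision) pairs.
records = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

REVIEW_THRESHOLD = 0.8  # assumed cutoff; choose one that fits your context

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"ratio={ratio:.2f} needs_review={needs_review}")
```

In this made-up sample, group A receives favorable decisions three times as often as group B, so the check flags the system for closer review. Running the same check on a schedule, not only at launch, is what turns it into monitoring.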

Prioritize transparency from day one. Be open about what an AI system does, what data it uses, and how it makes decisions. Provide explanations for AI-driven decisions, especially where they significantly affect individuals.
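For simple models, an explanation can be as direct as showing how much each input pushed the result up or down. The sketch below assumes a hypothetical linear scoring model. The feature names, weights, and threshold are invented for illustration, and more complex models generally need dedicated explanation techniques that this example does not cover.

```python
def explain_linear_decision(weights, bias, features, threshold=0.0):
    """Score one case with a linear model and rank each feature's contribution.

    A feature's contribution is its weight multiplied by its value.
    The case is approved when bias + sum(contributions) >= threshold.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Largest absolute contribution first, so the main drivers lead the list.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score >= threshold, "score": score, "ranked": ranked}


# Hypothetical model and applicant; none of these values come from a real system.
weights = {"income_k": 0.02, "missed_payments": -0.5, "years_at_address": 0.1}
bias = -1.0
applicant = {"income_k": 60, "missed_payments": 2, "years_at_address": 3}

result = explain_linear_decision(weights, bias, applicant)

print("approved:", result["approved"])
for name, value in result["ranked"]:
    direction = "raised" if value > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(value):.2f}")
```

An output like this gives the affected person something specific to respond to, such as which factor counted against them most, instead of an unexplained yes or no.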

Invest in education so that everyone involved, from developers to executives to end users, understands both the capabilities and the limitations of AI systems. Responsible AI requires buy-in at every level.

What Happens When Organizations Get It Wrong?

Poorly designed AI perpetuates and amplifies existing biases. Algorithms have discriminated against women in hiring, given harsher sentencing recommendations for people of color, and denied services to elderly populations. These aren't hypothetical risks—they're documented failures that harmed real people.

When failures become public, reputational damage can be immense. Trust, once lost, is difficult to rebuild. Customers, partners, and employees become skeptical of the organization's ethical commitment.

Legal consequences are increasingly likely. Organizations deploying biased or harmful systems may face lawsuits, regulatory penalties, and mandated changes. Regulators are taking more active roles in holding organizations accountable.

Beyond direct harms, there's a broader societal cost. Each AI failure feeds public anxiety about these technologies and makes it harder for responsible organizations to deploy AI beneficially.

Organizations neglecting responsible AI also miss opportunities to create genuine value. AI done right can address society's pressing challenges—but only when built on trust and responsibility.

Real-World Leaders in Responsible AI

Several organizations are setting examples worth following. Microsoft developed a comprehensive responsible AI governance framework with detailed guidelines and tools like fairness checklists. It has made these resources publicly available, raising the bar across the industry.

IBM established an AI Ethics Board—a dedicated group guiding AI initiatives and ensuring alignment with ethical principles. This institutional structure signals that responsible AI is a priority at the highest levels.

H&M developed its own responsible AI framework for operations from inventory management to customer service, demonstrating that responsible AI isn't just for tech giants.

Accenture implemented AI-powered hiring tools designed to reduce recruitment bias, with built-in safeguards and regular audits. This shows how responsible principles can be operationalized where stakes are high.

These examples share common themes: proactive governance, transparency, ongoing monitoring, and willingness to be held accountable.

Moving Forward

Responsible AI is a journey, not a destination. As technologies evolve, our understanding of responsibility will develop too. What matters is commitment to continuously questioning, improving, and holding ourselves accountable.

This isn't just an ethical imperative—it's strategic. Organizations building trust through responsible practices will be better positioned to innovate, attract talent, comply with regulations, and build lasting relationships.

The potential of AI is real, but realizing it requires intentionality. By embracing responsible practices, organizations help ensure that as AI becomes more powerful, it reflects our highest values.

The question isn't whether we'll have AI—we already do. The question is what kind: AI that reinforces inequities or promotes fairness; AI that operates in shadows or is transparent; AI that serves narrow interests or benefits society. These choices are ours to make.