Scanning repositories

How to scan GitHub repositories for AI and ML library usage, review detected dependencies, and assess model file security vulnerabilities.

Overview

AI Detection scans GitHub repositories to identify AI and machine learning libraries in your codebase. It helps organizations discover "shadow AI" — AI usage that may not be formally documented or approved — and supports compliance efforts by maintaining an inventory of AI technologies.

The scanner analyzes source files, dependency manifests, and model files to detect more than 50 AI/ML libraries and frameworks, including OpenAI, TensorFlow, PyTorch, and LangChain. Results are stored for audit purposes and can be reviewed at any time from the scan history.

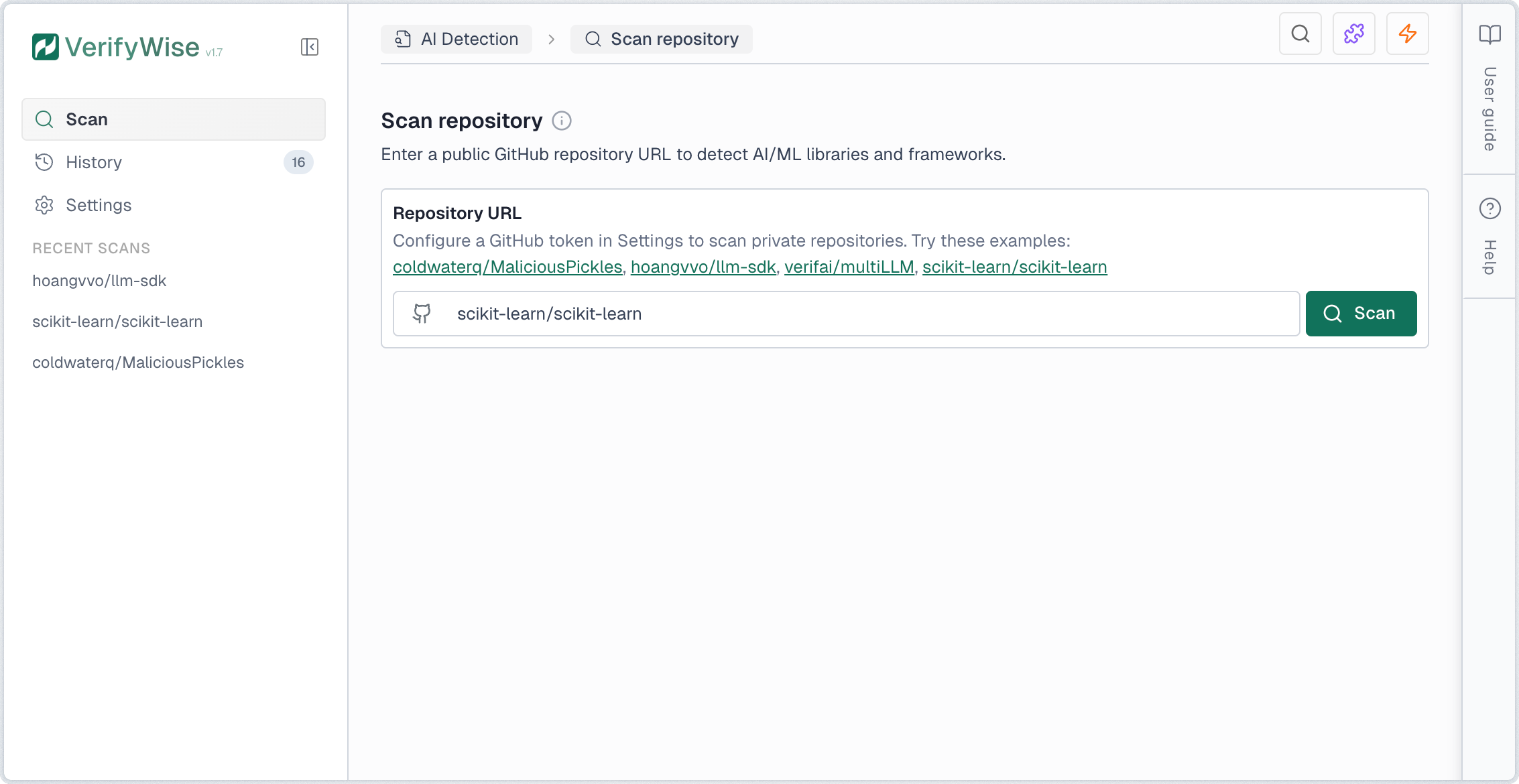

Starting a scan

To scan a repository, enter the GitHub URL in the input field. You can use either the full URL format (https://github.com/owner/repo) or the short format (owner/repo). Click Scan to begin the analysis.
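As an illustration of the two accepted input formats, the sketch below normalizes either form into an owner and repository name. The helper name `parse_repo` and the allowed character sets are assumptions made for this example; the scanner's actual validation rules are not documented here.

```python
import re

# Hypothetical pattern: an optional "https://github.com/" prefix followed by owner/repo.
# The allowed characters are an assumption, not the product's validation rule.
_REPO_RE = re.compile(
    r"^(?:https?://github\.com/)?"
    r"(?P<owner>[A-Za-z0-9-]+)/"
    r"(?P<repo>[A-Za-z0-9._-]+)/?$"
)


def parse_repo(value: str) -> tuple[str, str]:
    """Return (owner, repo) for a full GitHub URL or the short owner/repo form."""
    match = _REPO_RE.match(value.strip())
    if match is None:
        raise ValueError(f"not a recognized GitHub repository: {value!r}")
    return match.group("owner"), match.group("repo")


print(parse_repo("https://github.com/owner/repo"))
print(parse_repo("owner/repo"))
```

Both calls yield the same owner and repository pair, which is why either format can be typed into the input field.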

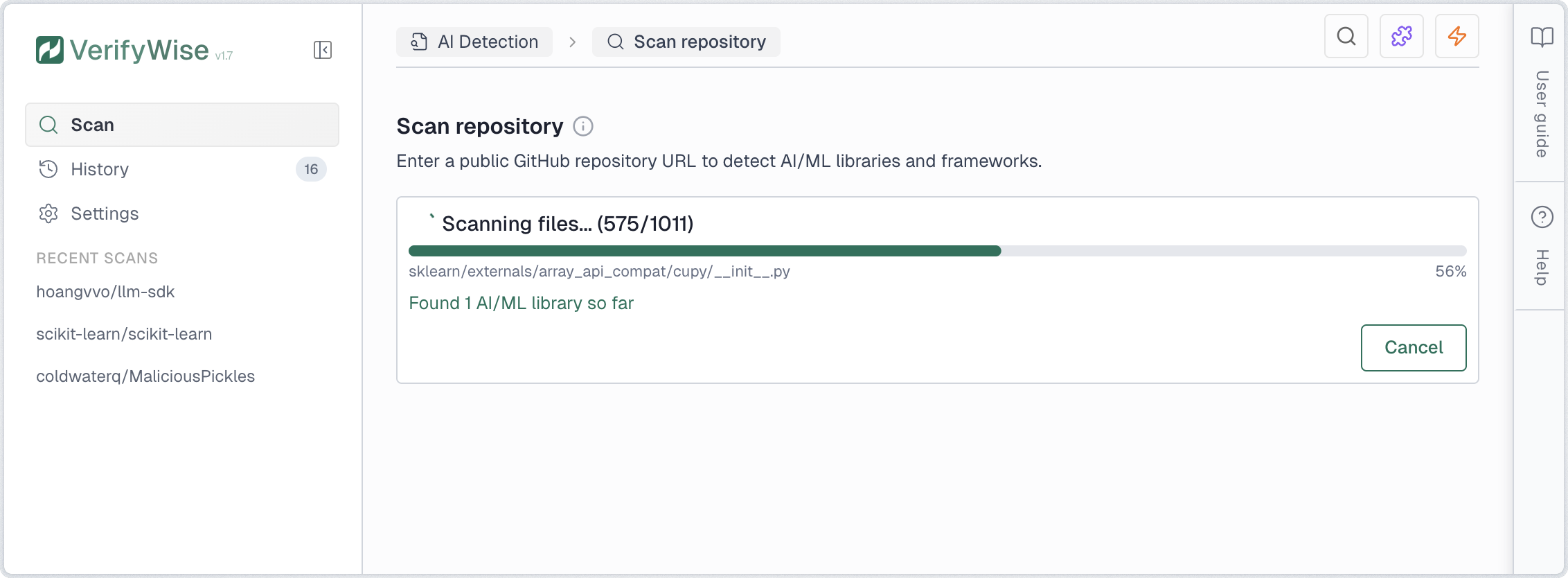

Scan progress

Once started, the scan moves through the stages listed below. A progress indicator shows real-time status, including the file currently being analyzed, the number of files processed, and the number of findings so far.

- Cloning: Downloads the repository to a temporary cache

- Scanning: Analyzes files for AI/ML patterns and security issues

- Completed: Results are ready for review

You can cancel an in-progress scan at any time by clicking Cancel. Partial results are discarded, and the scan is marked as cancelled in the scan history.
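The stage progression and the cancel behavior can be pictured as a small state machine. This is a minimal sketch: the names `ScanStatus`, `TRANSITIONS`, and `advance` are hypothetical, and the product may track additional states (for example, failures) that are not described here.

```python
from enum import Enum


class ScanStatus(Enum):
    CLONING = "cloning"
    SCANNING = "scanning"
    COMPLETED = "completed"
    CANCELLED = "cancelled"


# Assumed transitions: a scan can be cancelled only while it is still in progress.
TRANSITIONS = {
    ScanStatus.CLONING: {ScanStatus.SCANNING, ScanStatus.CANCELLED},
    ScanStatus.SCANNING: {ScanStatus.COMPLETED, ScanStatus.CANCELLED},
    ScanStatus.COMPLETED: set(),
    ScanStatus.CANCELLED: set(),
}


def advance(current: ScanStatus, target: ScanStatus) -> ScanStatus:
    """Move to the target status, rejecting transitions the model does not allow."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target


status = advance(ScanStatus.CLONING, ScanStatus.SCANNING)
status = advance(status, ScanStatus.CANCELLED)  # user clicked Cancel mid-scan
```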

Statistics dashboard

The scan page displays key statistics about your AI Detection activity. These cards provide a quick overview of your scanning efforts and findings.

- Total scans: Number of scans performed, with a count of completed scans

- Repositories: Unique repositories that have been scanned

- Total findings: Combined count of all AI/ML detections across all scans

- Libraries: AI/ML library imports and dependencies detected

- API calls: Direct API calls to AI providers (OpenAI, Anthropic, etc.)

- Security issues: Hardcoded secrets and model file vulnerabilities combined

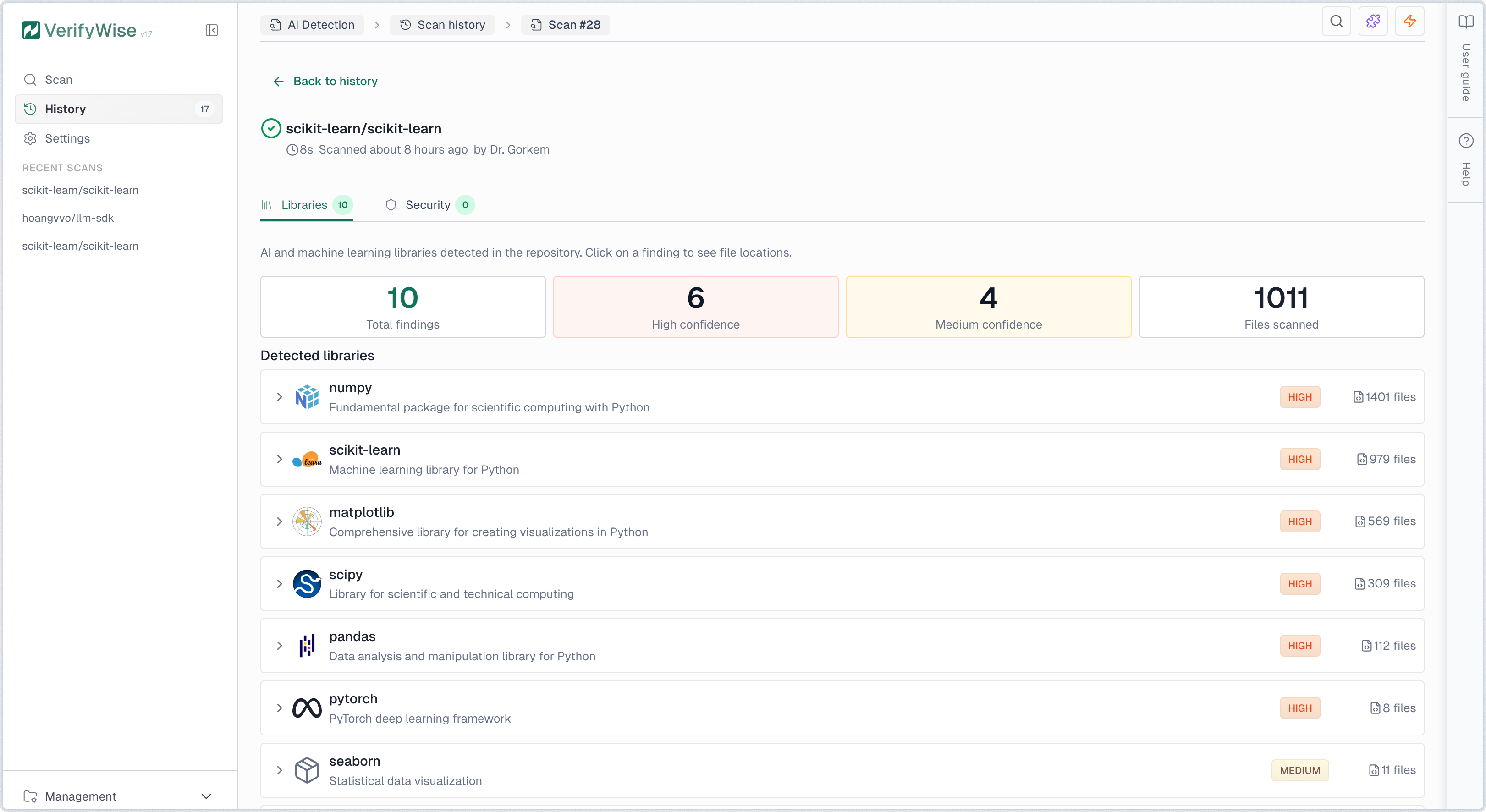

Understanding results

Scan results are organized into four tabs: Libraries for detected AI/ML frameworks, API Calls for direct provider integrations, Secrets for hardcoded credentials, and Security for model file vulnerabilities.

Libraries tab

The Libraries tab displays all detected AI/ML technologies. Each finding shows the library name, provider, risk level, confidence level, and number of files where it was found. Click any row to expand and view specific file paths and line numbers.

Risk levels indicate the potential data exposure:

- High risk: Data sent to external cloud APIs. Risk of data leakage, vendor lock-in, and compliance violations.

- Medium risk: Framework that can connect to cloud APIs depending on configuration. Review usage to assess actual risk.

- Low risk: Local processing only. Data stays on your infrastructure with minimal external exposure.

Confidence levels indicate detection certainty:

- High: Direct, unambiguous match such as explicit imports or dependency declarations

- Medium: Likely match with some ambiguity, such as generic utility imports

- Low: Possible match requiring manual verification
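To make the risk and confidence levels concrete, here is a rough sketch of how an explicit import could become a high-confidence finding. The `CATALOG` mapping, its three entries, and the `find_ai_imports` helper are illustrative assumptions; they are not the scanner's actual rule set, and the sketch handles Python source only.

```python
import ast

# Hypothetical catalog: top-level module -> (provider, risk level).
CATALOG = {
    "openai": ("OpenAI", "high"),          # data sent to an external cloud API
    "langchain": ("LangChain", "medium"),  # may call cloud APIs, depending on configuration
    "torch": ("PyTorch", "low"),           # local processing
}


def find_ai_imports(source: str) -> list[dict]:
    """Return one finding per import statement that names a cataloged library."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules = [node.module]
        else:
            continue
        for module in modules:
            top_level = module.split(".")[0]
            if top_level in CATALOG:
                provider, risk = CATALOG[top_level]
                findings.append({
                    "library": top_level,
                    "provider": provider,
                    "risk": risk,
                    "confidence": "high",  # an explicit import is an unambiguous match
                    "line": node.lineno,
                })
    return sorted(findings, key=lambda finding: finding["line"])


sample = "import openai\nfrom torch import nn\nimport os\n"
for finding in find_ai_imports(sample):
    print(finding)
```

Lower confidence levels would come from weaker signals, such as a generic utility import or a name match in a comment, which this sketch does not attempt to model.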

Governance status

Each library finding can be assigned a governance status to track review progress. Click the status icon on any finding row to set or change its status:

- Reviewed: Finding has been examined, but no decision has been made yet

- Approved: Usage is authorized and compliant with organization policies

- Flagged: Requires attention or is not approved for use

API Calls tab

The API Calls tab shows direct integrations with AI provider APIs detected in your codebase. These represent active usage of AI models and services, such as calls to OpenAI, Anthropic, Google AI, and other providers.

API call findings include:

- REST API endpoints: Direct HTTP calls to AI provider APIs (e.g., api.openai.com)

- SDK method calls: Usage of official SDKs (e.g., openai.chat.completions.create())

- Framework integrations: LangChain, LlamaIndex, and other framework API calls
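The first two finding types can be approximated with simple line-by-line pattern matching. The sketch below is an assumption about how such detection might look, using only the two examples named in the list; the `PATTERNS` table and `find_api_calls` helper are hypothetical.

```python
import re

# Illustrative patterns based on the examples in the list above.
PATTERNS = {
    "rest_endpoint": re.compile(r"https://api\.openai\.com/[\w/.-]*"),
    "sdk_call": re.compile(r"\bchat\.completions\.create\s*\("),
}


def find_api_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, finding kind) for each line that matches a pattern."""
    hits = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, kind))
    return hits


sample = (
    "resp = client.chat.completions.create(model=m, messages=msgs)\n"
    'url = "https://api.openai.com/v1/chat/completions"\n'
)
print(find_api_calls(sample))
```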

Secrets tab

The Secrets tab identifies hardcoded API keys and credentials in your codebase. These should be moved to environment variables or a secrets manager to prevent accidental exposure.

The scanner detects common AI provider API key patterns:

- OpenAI API keys: Keys starting with sk-...

- Anthropic API keys: Keys starting with sk-ant-...

- Google AI API keys: Keys starting with AIza...

- Other provider keys: AWS, Azure, Cohere, and other AI service credentials
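Because the Anthropic prefix is a more specific form of the OpenAI prefix, a prefix-based classifier has to test the more specific pattern first. The sketch below shows that ordering. The prefixes come from the list above; the character classes, the minimum length, and the `classify_key` helper are assumptions for illustration only.

```python
import re
from typing import Optional

# Order matters: "sk-ant-" must be tested before the more general "sk-".
KEY_PATTERNS = [
    ("Anthropic", re.compile(r"\bsk-ant-[A-Za-z0-9_-]{8,}")),
    ("OpenAI", re.compile(r"\bsk-[A-Za-z0-9_-]{8,}")),
    ("Google AI", re.compile(r"\bAIza[A-Za-z0-9_-]{8,}")),
]


def classify_key(candidate: str) -> Optional[str]:
    """Return the provider whose key prefix matches, or None if nothing matches."""
    for provider, pattern in KEY_PATTERNS:
        if pattern.match(candidate):
            return provider
    return None


print(classify_key("sk-ant-EXAMPLEEXAMPLE"))
print(classify_key("sk-EXAMPLEEXAMPLE"))
```

Once a key is flagged, the usual fix is to read it at runtime instead, for example with `os.environ.get("OPENAI_API_KEY")` in Python, and to rotate the exposed key.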

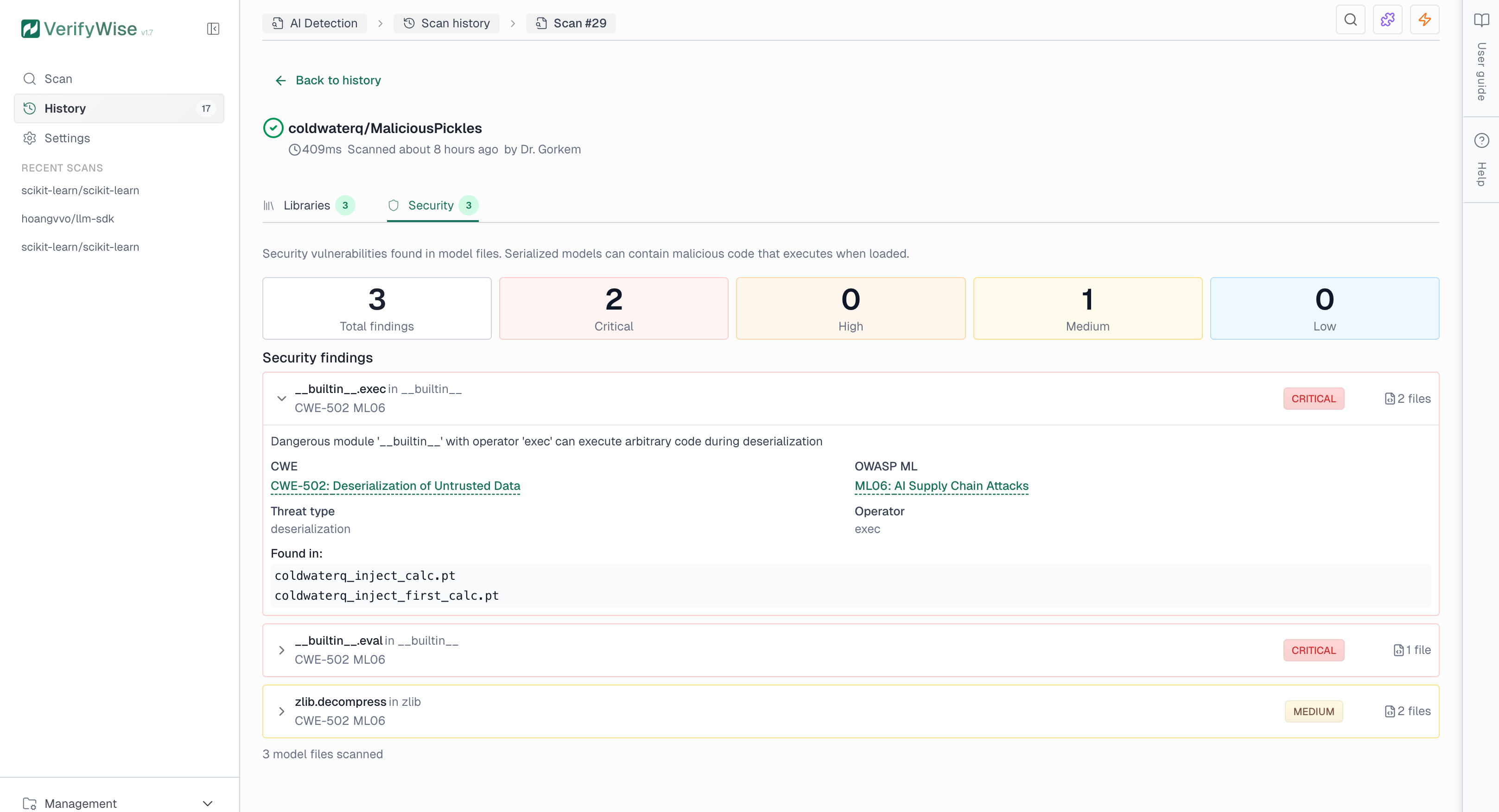

Security tab

The Security tab shows findings from model file analysis. Serialized model files (.pkl, .pt, .h5) can contain malicious code that executes when loaded. The scanner detects dangerous patterns such as system command execution, network access, and code injection.
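One way to see why pickle-based files are risky is to list the callables a pickle stream references without ever loading it. The sketch below does this with the standard-library `pickletools` module. It is a simplified illustration, not the scanner's implementation: the `SUSPICIOUS_MODULES` set is a small assumed denylist, the string tracking for `STACK_GLOBAL` is approximate, and only raw pickle bytes are handled (a .pt archive or .h5 file would need to be unpacked or parsed differently).

```python
import pickle
import pickletools

# Assumed denylist for illustration; a real scanner would cover far more cases.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket"}


def referenced_globals(data: bytes) -> set[tuple[str, str]]:
    """List (module, name) pairs a pickle would import, without unpickling it."""
    found = set()
    strings = []  # string arguments seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            module, name = arg.split(" ", 1)
            found.add((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            found.add((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


def is_suspicious(data: bytes) -> bool:
    """True if the pickle references any module on the denylist."""
    return any(
        module.split(".")[0] in SUSPICIOUS_MODULES
        for module, _name in referenced_globals(data)
    )


benign = pickle.dumps({"weights": [1.0, 2.0]})
print(is_suspicious(benign))
```

The key point is that `pickletools.genops` only decodes opcodes, so inspecting an untrusted file this way does not trigger the code that `pickle.load` would run.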

Each security finding has a severity level and one or more compliance references. The severity levels are:

- Critical: Direct code execution risk — immediate investigation required

- High: Indirect execution or data exfiltration risk

- Medium: Potentially dangerous depending on context

- Low: Informational or minimal risk

Compliance references

Security findings include industry-standard references to help with compliance reporting:

- CWE: Common Weakness Enumeration — industry standard for software security weaknesses (e.g., CWE-502 for deserialization vulnerabilities)

- OWASP ML Top 10: OWASP Machine Learning Security Top 10 — identifies critical security risks in ML systems (e.g., ML06 for AI Supply Chain Attacks)

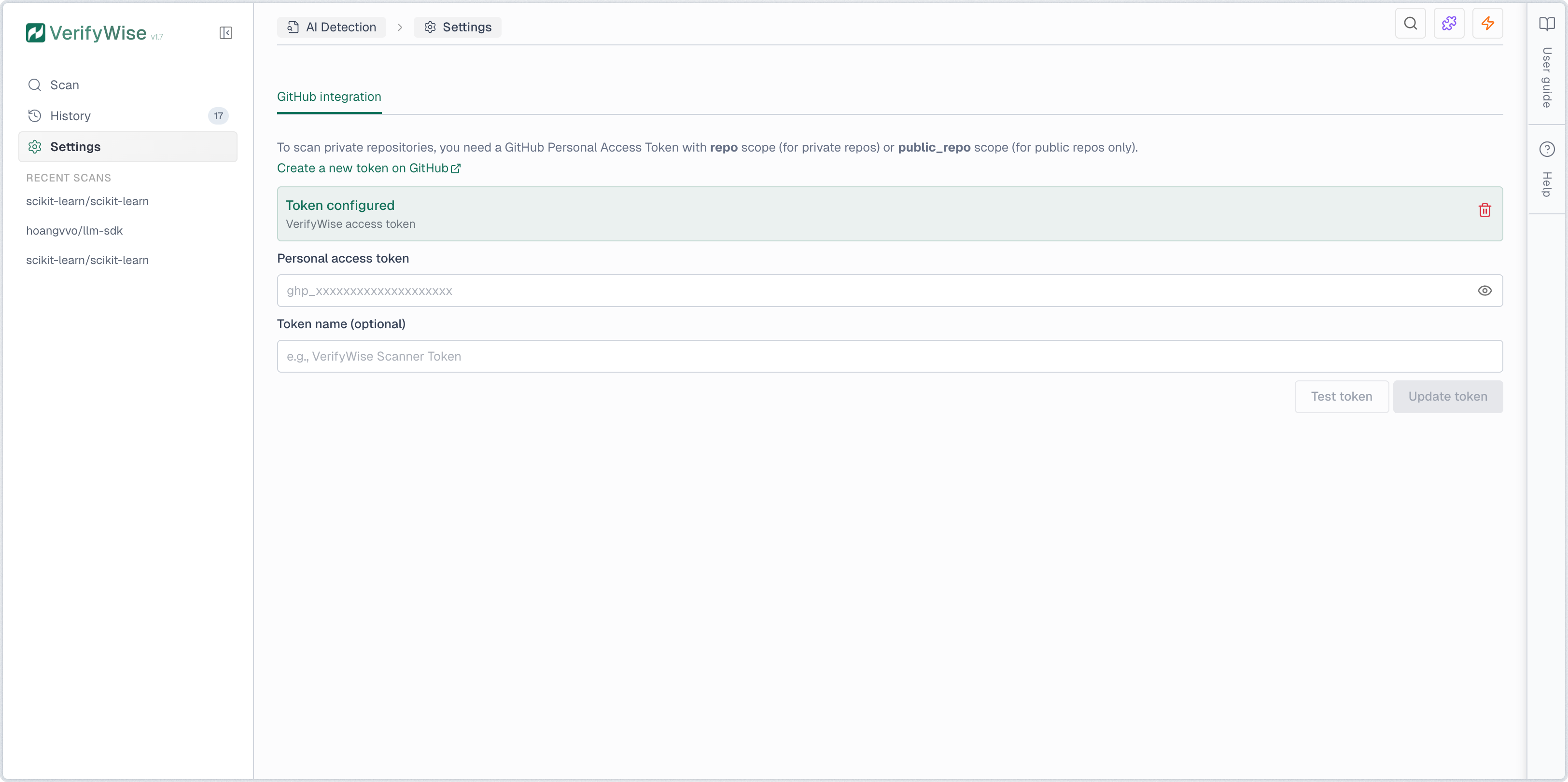

Scanning private repositories

To scan private repositories, you must configure a GitHub Personal Access Token (PAT) with the repo scope. Navigate to AI Detection → Settings to add your token. The token is encrypted at rest and used only for git clone operations.

For instructions on creating a GitHub PAT, see the official GitHub documentation at https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/creating-a-personal-access-token.
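Before adding a token, it can be useful to confirm that the token can see the repository you intend to scan. The sketch below builds a GitHub REST API request for that check. The `build_repo_check` helper and the `GITHUB_TOKEN` environment variable name are assumptions for this example; the check is independent of AI Detection and is not part of the scanner itself.

```python
import os
import urllib.request


def build_repo_check(owner: str, repo: str, token: str) -> urllib.request.Request:
    """Build a GET request for the repository's metadata, authenticated with the PAT."""
    return urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )


# Hypothetical variable name; read the token from the environment, never from source code.
token = os.environ.get("GITHUB_TOKEN", "")
request = build_repo_check("owner", "repo", token)
print(request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` should succeed when the token can read the repository. GitHub typically answers with a 404 error for a private repository the token cannot access.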