Your employees are already using AI. The question is whether you know about it.

A developer pastes proprietary source code into ChatGPT. A marketing analyst uploads customer data to an image generation tool. A finance team member routes sensitive queries through an unapproved LLM endpoint. None of these interactions appear in any approved software register.

This is shadow AI: the use of AI tools within an organization without IT, security, or compliance approval. It shows up not in software inventories but in network logs. And the gap between adoption and governance keeps widening. By the time an organization discovers an unapproved tool, sensitive data may have already been sent to a provider with unknown retention and training policies.

The consequences are concrete. Data sent to AI providers may be retained, used for training, or exposed through breaches. This creates compliance exposure under GDPR, HIPAA, SOC 2, and sector-specific rules that require data processing controls. Vendor relationships go untracked: no data processing agreements, no security assessments, no contractual protections.

And when a regulator asks which AI tools the organization uses, who uses them, and what data they process, most organizations cannot answer.

Why traditional security tools fall short

Firewalls can block domains but cannot categorize traffic as AI-related or assess an AI vendor's risk profile. DLP systems detect patterns in outbound data but don't maintain a registry of AI tools. SIEM platforms collect the raw logs that contain evidence of AI usage but lack the detection logic to find and classify it.

None of these tools were built to answer the question "which AI tools are people actually using?" That requires something purpose-built.

How VerifyWise Shadow AI detection works

Shadow AI detection in VerifyWise is passive. No agents on endpoints, no browser extensions. It ingests log data that organizations already collect from their security infrastructure.

Log ingestion

Two ingestion methods are available. A REST API accepts JSON-formatted events and works with SIEM systems, custom log pipelines, and orchestration tools. A syslog receiver on TCP port 5514 accepts standard syslog messages from web proxies, firewalls, and network appliances.
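Before pointing a proxy or appliance at the syslog receiver, it can help to send a hand-built test message. This is a minimal sketch that assumes the receiver accepts RFC 5424-formatted messages over plain TCP with newline framing. The source states only that it listens on TCP port 5514, so the message format and the framing are assumptions, and so is the hypothetical host name used below.

```python
import socket
from datetime import datetime, timezone


def build_syslog_line(hostname: str, app: str, msg: str,
                      facility: int = 16, severity: int = 6) -> bytes:
    """Build one RFC 5424 message, newline-terminated for TCP framing (assumed)."""
    pri = facility * 8 + severity  # local0 (16) + info (6) -> <134>
    ts = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    # <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID STRUCTURED-DATA MSG
    return f"<{pri}>1 {ts} {hostname} {app} - - - {msg}\n".encode("utf-8")


def send_syslog(host: str, payload: bytes, port: int = 5514) -> None:
    """Open a TCP connection to the receiver and send a single message."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)
```

For example, `send_syslog("verifywise.example.internal", build_syslog_line("proxy01", "squid", "user=alice host=chatgpt.com"))` would send one test event. The host name here is a placeholder and should be replaced with your deployment's receiver address.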

Parsing and normalization

Raw logs arrive in different formats depending on the source. Four built-in parsers handle the most common enterprise sources: Zscaler Internet Access, Netskope Cloud Security, Squid proxy, and a generic key-value parser for systems that output structured key=value pairs. Each parser extracts timestamp, source IP, username, destination domain, URL path, HTTP method, bytes transferred, and action taken, then normalizes them into a common event schema.
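The sketch below shows what a parser for the generic key-value case could look like. The field names (`ts`, `src`, `user`, `host`, `path`, `method`, `bytes`, `action`) and the `NormalizedEvent` schema are hypothetical stand-ins. The built-in parser's actual keys and schema are not documented here.

```python
import shlex
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class NormalizedEvent:
    """Hypothetical common schema mirroring the fields listed above."""
    timestamp: datetime
    src_ip: str
    username: str
    domain: str
    url_path: str
    method: str
    bytes_transferred: int
    action: str


def parse_kv_line(line: str) -> Optional[NormalizedEvent]:
    """Parse one key=value log line; return None if required fields are missing."""
    try:
        # shlex.split keeps quoted values such as ua="Mozilla/5.0 (X11)" intact
        tokens = shlex.split(line)
        pairs = dict(tok.split("=", 1) for tok in tokens if "=" in tok)
        return NormalizedEvent(
            timestamp=datetime.fromisoformat(pairs["ts"]),
            src_ip=pairs["src"],
            username=pairs.get("user", "unknown"),
            domain=pairs["host"].lower(),
            url_path=pairs.get("path", "/"),
            method=pairs.get("method", "GET").upper(),
            bytes_transferred=int(pairs.get("bytes", "0")),
            action=pairs.get("action", "allowed"),
        )
    except (KeyError, ValueError):
        # Missing required keys, bad timestamp or number, or unbalanced quotes
        return None
```

Returning `None` rather than raising lets a pipeline count and skip malformed lines without stopping ingestion.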

AI tool identification

Normalized events are matched against a curated registry of over 50 AI tools and services organized by category: LLM chatbots (ChatGPT, Claude, Gemini, Perplexity), coding assistants (GitHub Copilot, Cursor, Tabnine), image generators (Midjourney, DALL-E, Stable Diffusion), writing tools (Notion AI, Jasper, Grammarly), and low-code AI platforms (v0.dev, Bolt, Replit). Each registry entry includes the tool name, provider, associated domains, default risk classification, and category metadata.
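Domain matching against a registry like this usually works on whole domain labels, so that subdomains such as `api.claude.ai` still resolve to their parent entry. The following is one possible approach to that lookup and does not describe VerifyWise's data model. The `RegistryEntry` fields follow the metadata listed above. The three sample entries, their domains, and their risk labels are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RegistryEntry:
    name: str
    provider: str
    category: str
    domains: tuple[str, ...]
    default_risk: str


# Illustrative entries only; the real registry covers over 50 tools.
REGISTRY = [
    RegistryEntry("ChatGPT", "OpenAI", "llm_chatbot", ("chatgpt.com", "chat.openai.com"), "high"),
    RegistryEntry("Claude", "Anthropic", "llm_chatbot", ("claude.ai",), "high"),
    RegistryEntry("GitHub Copilot", "GitHub", "coding_assistant", ("githubcopilot.com",), "medium"),
]

# Flatten to domain -> entry for constant-time lookups
DOMAIN_INDEX = {domain: entry for entry in REGISTRY for domain in entry.domains}


def match_tool(host: str) -> Optional[RegistryEntry]:
    """Match a host, then each parent domain: api.claude.ai -> claude.ai.

    Matching whole labels avoids false hits such as notclaude.ai.
    """
    labels = host.lower().rstrip(".").split(".")
    for i in range(len(labels) - 1):  # stop before the bare TLD
        entry = DOMAIN_INDEX.get(".".join(labels[i:]))
        if entry is not None:
            return entry
    return None
```

A naive `host.endswith("claude.ai")` check would also match `notclaude.ai`. Walking up the domain one label at a time avoids that false positive.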

Detection goes beyond domain matching. Regex patterns applied to URI paths extract specific model identifiers from API traffic. When a request hits the AWS Bedrock endpoint with a path containing anthropic.claude-3-opus, or when traffic to Azure OpenAI references gpt-4-turbo in the deployment path, the system captures the specific model being invoked. This model-level extraction covers AWS Bedrock, Azure OpenAI, Google Vertex AI, and direct API endpoints for major providers.
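The regular expressions below show how model-level extraction might work for the three cloud platforms named above. The path shapes follow the public REST APIs of Bedrock runtime (`/model/{modelId}/invoke`), Azure OpenAI (`/openai/deployments/{deployment}/...`), and Vertex AI (`.../publishers/{publisher}/models/{model}:generateContent`). The `MODEL_PATTERNS` table, the platform keys, and the `extract_model` helper are hypothetical.

```python
import re
from typing import Optional
from urllib.parse import unquote

# One pattern per platform; the named group "model" captures the identifier.
MODEL_PATTERNS = {
    # Bedrock runtime: /model/{modelId}/invoke, /converse, and streaming variants
    "aws_bedrock": re.compile(r"^/model/(?P<model>[^/]+)/(?:invoke|converse)"),
    # Azure OpenAI: /openai/deployments/{deployment}/chat/completions, ...
    "azure_openai": re.compile(r"^/openai/deployments/(?P<model>[^/]+)/"),
    # Vertex AI: .../publishers/{publisher}/models/{model}:generateContent
    "vertex_ai": re.compile(r"/publishers/[^/]+/models/(?P<model>[^/:]+)"),
}


def extract_model(platform: str, path: str) -> Optional[str]:
    """Return the model identifier embedded in a request path, if any."""
    pattern = MODEL_PATTERNS.get(platform)
    if pattern is None:
        return None
    # Bedrock model IDs contain ':' which clients often percent-encode as %3A
    match = pattern.search(unquote(path))
    return match.group("model") if match else None
```

On Azure OpenAI, the captured value is the deployment name, which the customer chooses. It identifies the underlying model only when teams name their deployments after it, as in `gpt-4-turbo`.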

Event enrichment

Every detected event is enriched with organizational context. The username is correlated with department, job title, and reporting manager from the SIEM data. A finance analyst accessing an unapproved AI tool carries different risk than a developer using the same tool, and the scoring model needs that distinction.
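At its simplest, enrichment is a lookup from the normalized username into a directory of organizational attributes. In this sketch, the `directory` mapping, the `UserContext` type, and the username normalization rules are hypothetical. In practice, the mapping would come from whichever source supplies department, title, and manager data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UserContext:
    department: str
    title: str
    manager: str


def normalize_account(raw: str) -> str:
    """Reduce CORP\\alice or alice@corp.example to 'alice'."""
    return raw.split("\\")[-1].split("@")[0].lower()


def enrich(event: dict, directory: dict[str, UserContext]) -> dict:
    """Return a copy of the event with department, title, and manager attached."""
    ctx: Optional[UserContext] = directory.get(normalize_account(event.get("username", "")))
    return {
        **event,
        "department": ctx.department if ctx else "Unknown",
        "job_title": ctx.title if ctx else None,
        "manager": ctx.manager if ctx else None,
    }
```

Labeling unmatched users as `"Unknown"` keeps them visible in reports instead of silently dropping them.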

Risk scoring: four weighted factors

Each detected AI tool gets a composite risk score from 0 to 100, computed from four weighted factors.

Approval status (40%) is the largest factor. An unapproved tool with no governance record gets the maximum penalty. A formally reviewed and approved tool gets the minimum. Tools under active review fall in between.

Data policy compliance (25%) evaluates the AI vendor's security posture. Does the vendor hold SOC 2 Type II? Is the service GDPR-compliant with a published data processing agreement? Does the vendor encrypt data at rest and in transit? Does it use customer data for model training? Vendors that train on customer data by default (opt-out rather than opt-in) or publish no retention policy score as higher risk.

Department sensitivity (20%) weights usage from Finance, Legal, HR, and Executive functions higher than Marketing, Sales, or Engineering. These departments handle regulated data (financial records, legal privilege, employee PII, strategic plans) where exposure to an unvetted AI tool has disproportionate consequences.

Usage volume (15%) normalizes raw event counts against organizational averages to find outliers. A tool generating 10x the average traffic volume signals elevated risk regardless of approval status, because high volume implies deeper workflow integration and greater data exposure.

Risk scores are not static. They recalculate as new events arrive, usage patterns shift, and tool status progresses through governance.
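The weighted combination fits in a few lines. This sketch uses the four published weights. How each factor maps onto the 0-to-1 range is an assumption, however. The approval and department values, the equal weighting of vendor checks, and the cap on the volume factor at 10x the organizational average are illustrative choices and do not come from VerifyWise's documented formula.

```python
from dataclasses import dataclass

WEIGHTS = {"approval": 0.40, "data_policy": 0.25, "department": 0.20, "volume": 0.15}

# Hypothetical factor mappings, each in [0, 1] where 1 is riskiest
APPROVAL_RISK = {"unapproved": 1.0, "under_review": 0.5, "approved": 0.0}
DEPARTMENT_RISK = {"Finance": 1.0, "Legal": 1.0, "HR": 1.0, "Executive": 1.0}
DEFAULT_DEPARTMENT_RISK = 0.4  # Marketing, Sales, Engineering, and others


@dataclass
class VendorPosture:
    soc2_type2: bool
    gdpr_dpa: bool
    encrypts_data: bool
    trains_on_customer_data: bool
    publishes_retention_policy: bool


def data_policy_risk(v: VendorPosture) -> float:
    """Fraction of vendor checks that fail (0 = all pass, 1 = all fail)."""
    failures = [
        not v.soc2_type2,
        not v.gdpr_dpa,
        not v.encrypts_data,
        v.trains_on_customer_data,
        not v.publishes_retention_policy,
    ]
    return sum(failures) / len(failures)


def volume_risk(tool_events: int, org_avg_events: float, cap_ratio: float = 10.0) -> float:
    """Event count relative to the org average, saturating at cap_ratio x average."""
    if org_avg_events <= 0:
        return 0.0
    return min(tool_events / org_avg_events, cap_ratio) / cap_ratio


def risk_score(approval: str, vendor: VendorPosture, department: str,
               tool_events: int, org_avg_events: float) -> int:
    factors = {
        "approval": APPROVAL_RISK[approval],
        "data_policy": data_policy_risk(vendor),
        "department": DEPARTMENT_RISK.get(department, DEFAULT_DEPARTMENT_RISK),
        "volume": volume_risk(tool_events, org_avg_events),
    }
    # Weighted sum of factors in [0, 1], scaled to 0-100
    return round(100 * sum(WEIGHTS[k] * value for k, value in factors.items()))
```

Consider an unapproved tool used by Finance at 10x average volume, from a vendor that fails only the training check. Under these assumptions it scores 100 × (0.40 × 1 + 0.25 × 0.2 + 0.20 × 1 + 0.15 × 1) = 80. Because the score is a pure function of its inputs, recalculating it as new events arrive only requires calling it again.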

Alerting through a configurable rules engine

The alerting system has six configurable trigger types:

- New AI tool detected - fires when the system sees a tool it has never encountered in the organization's traffic

- Usage threshold exceeded - fires when a tool's event count surpasses a configurable limit within a time period

- Sensitive department access - fires when users from high-sensitivity departments access a tool or tool category

- Blocked access attempts - fires when logs show a proxy or firewall blocked access to an AI tool, indicating intent even when access was denied

- Risk score exceeded - fires when a tool's composite risk score crosses a configurable boundary

- New user detected - fires when a previously unseen user begins accessing a known AI tool

Each rule can trigger one or more actions: send alert notifications, create governance tasks with owners and deadlines, start a governance review, or create risk entries. Cooldown periods prevent alert fatigue by suppressing repeat notifications for a configurable interval. Recipient lists are set per rule, so a new-tool alert reaches the security team while a sensitive-department alert goes to the compliance officer.
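Here is a single rule with a cooldown, written as a sketch. The `AlertRule` class, the action names, and the choice to key the cooldown per tool are hypothetical. The source says only that rules have triggers, actions, recipients, and a configurable cooldown interval.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable


@dataclass
class AlertRule:
    """Hypothetical rule shape: trigger predicate, actions, recipients, cooldown."""
    name: str
    trigger: Callable[[dict], bool]
    actions: list[str]
    recipients: list[str]
    cooldown: timedelta
    # Last time this rule fired, per tool; not part of the constructor
    _last_fired: dict[str, datetime] = field(default_factory=dict, init=False, repr=False)

    def evaluate(self, event: dict, now: datetime) -> list[str]:
        """Return the actions to run, or [] if not triggered or still cooling down."""
        if not self.trigger(event):
            return []
        key = event["tool"]
        last = self._last_fired.get(key)
        if last is not None and now - last < self.cooldown:
            return []  # suppressed to avoid alert fatigue
        self._last_fired[key] = now
        return list(self.actions)


SENSITIVE_DEPARTMENTS = {"Finance", "Legal", "HR", "Executive"}

sensitive_access = AlertRule(
    name="sensitive-department-access",
    trigger=lambda e: e["department"] in SENSITIVE_DEPARTMENTS,
    actions=["notify", "start_governance_review"],
    recipients=["compliance-officer@example.com"],
    cooldown=timedelta(hours=24),
)
```

Keying the cooldown per tool means a burst of Finance traffic to one tool produces one alert per day, while a second tool still alerts right away.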

From detection to governance in four steps

Most organizations that tackle shadow AI stop at detection. They identify which tools are in use, produce a report, and leave it to manual processes to decide what happens next. The governance wizard in VerifyWise converts a detected tool into a managed, trackable asset through four steps.

Step 1: Add to Model Inventory. The detected tool goes into the organization's Model Inventory. Fields are pre-filled from detection data (tool name, provider, primary domain, category, initial risk score). The governance owner reviews and fills in the rest.

Step 2: Assign governance ownership. An owner, reviewer, and due date are assigned. The owner drives the evaluation, the reviewer provides secondary oversight, and the due date creates accountability.

Step 3: Risk assessment. A structured assessment captures data sensitivity classification (what data types are exposed), user count (how many people use it), and department exposure (which functions are affected). This feeds into the VerifyWise risk register.

Step 4: Initiate model lifecycle (optional). The tool can be enrolled in a full lifecycle process: vendor security review, legal review of terms of service, and data processing agreement negotiation.

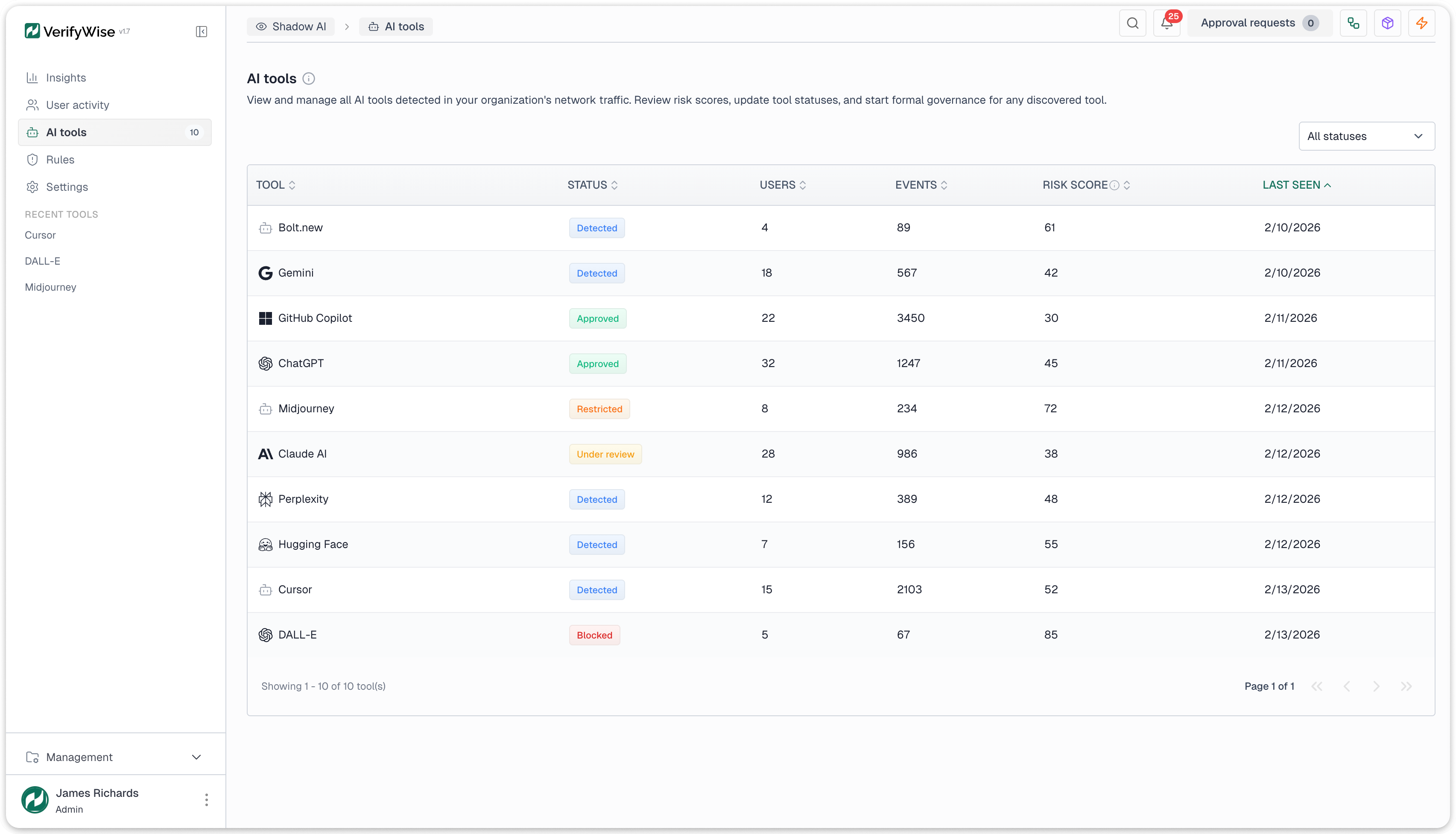

As a tool moves through governance, its status progresses: Detected (no action taken), Under review (governance started), then one of four terminal states: Approved (sanctioned), Restricted (permitted with conditions), Blocked (prohibited), or Dismissed (irrelevant or false positive).

The result is an auditable chain: unknown tool in network traffic, governance review with an assigned owner, then a formal approval decision with documentation. That's what regulators and auditors actually look for.
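The status progression behaves like a small state machine that logs every transition. This sketch follows the sequence as described, so terminal decisions are allowed only from Under review. The source does not say whether the product permits shortcuts, such as dismissing a false positive straight from Detected. The `transition` helper and the audit tuple layout are hypothetical.

```python
from enum import Enum


class ToolStatus(Enum):
    DETECTED = "detected"
    UNDER_REVIEW = "under_review"
    APPROVED = "approved"
    RESTRICTED = "restricted"
    BLOCKED = "blocked"
    DISMISSED = "dismissed"


TERMINAL = frozenset({ToolStatus.APPROVED, ToolStatus.RESTRICTED,
                      ToolStatus.BLOCKED, ToolStatus.DISMISSED})

# Detected -> Under review -> exactly one terminal decision
ALLOWED = {
    ToolStatus.DETECTED: frozenset({ToolStatus.UNDER_REVIEW}),
    ToolStatus.UNDER_REVIEW: TERMINAL,
}


def transition(current: ToolStatus, target: ToolStatus,
               audit_log: list, actor: str, note: str) -> ToolStatus:
    """Validate a status change and append it to the audit log."""
    if target not in ALLOWED.get(current, frozenset()):
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    audit_log.append((current.value, target.value, actor, note))
    return target
```

Rejecting illegal transitions, rather than silently overwriting the status, guarantees that the audit log records every decision an auditor would ask about.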

Analytics and reporting

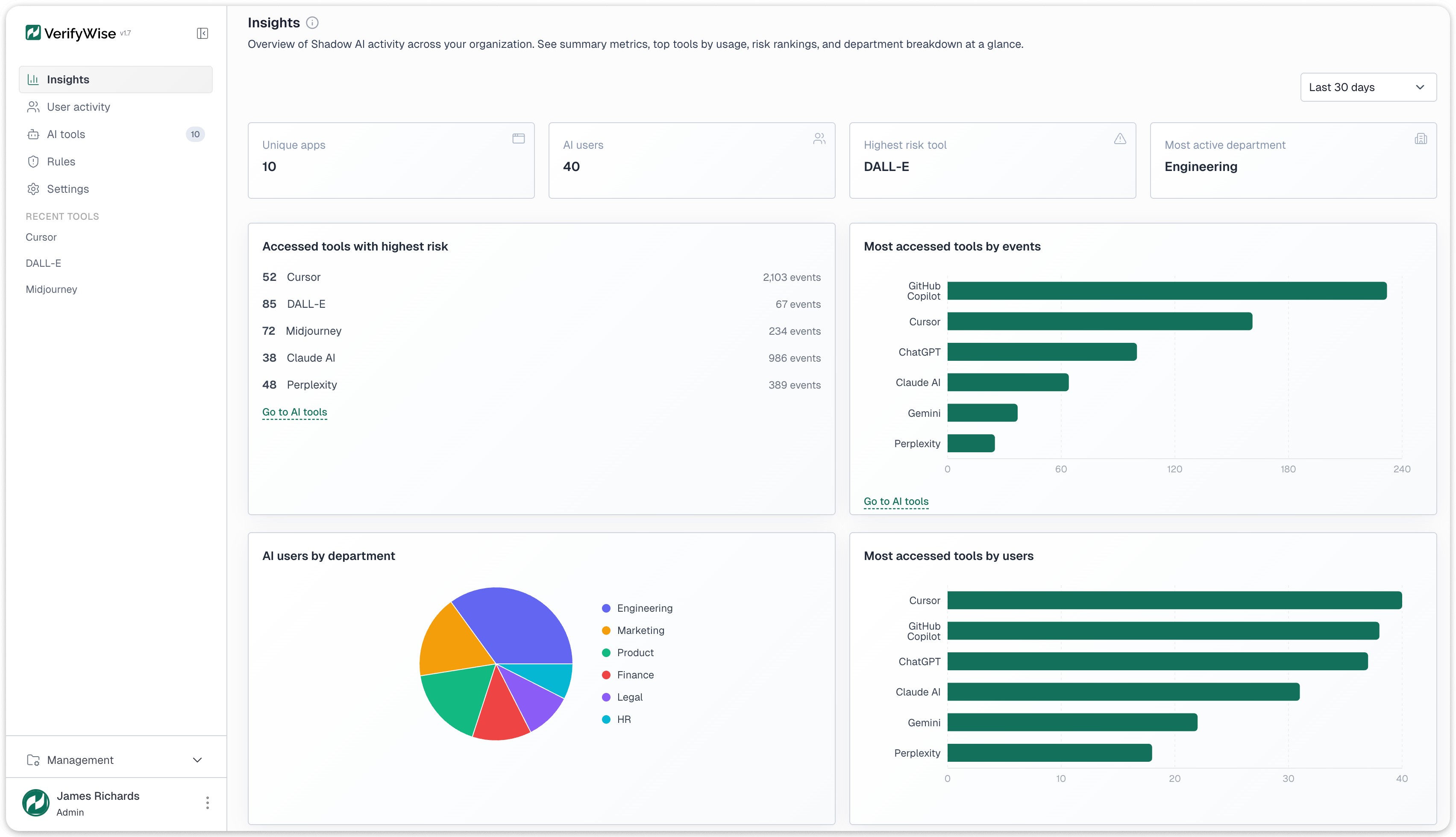

The insights dashboard opens with four KPI cards: unique AI applications discovered, total AI users across all tools, the highest-risk tool currently detected, and the most active department by event volume. These give an executive-level snapshot before any drill-down.

Four chart types provide deeper views. A tools-by-event-volume bar chart ranks detected tools by total traffic, showing which tools have the largest data exposure surface. A tools-by-unique-users bar chart ranks by distinct user count, revealing which tools have the widest adoption. A users-by-department pie chart shows where AI usage concentrates. A trend analysis line chart tracks total events, unique users, and new tool discoveries over configurable ranges from 30 days to one year.

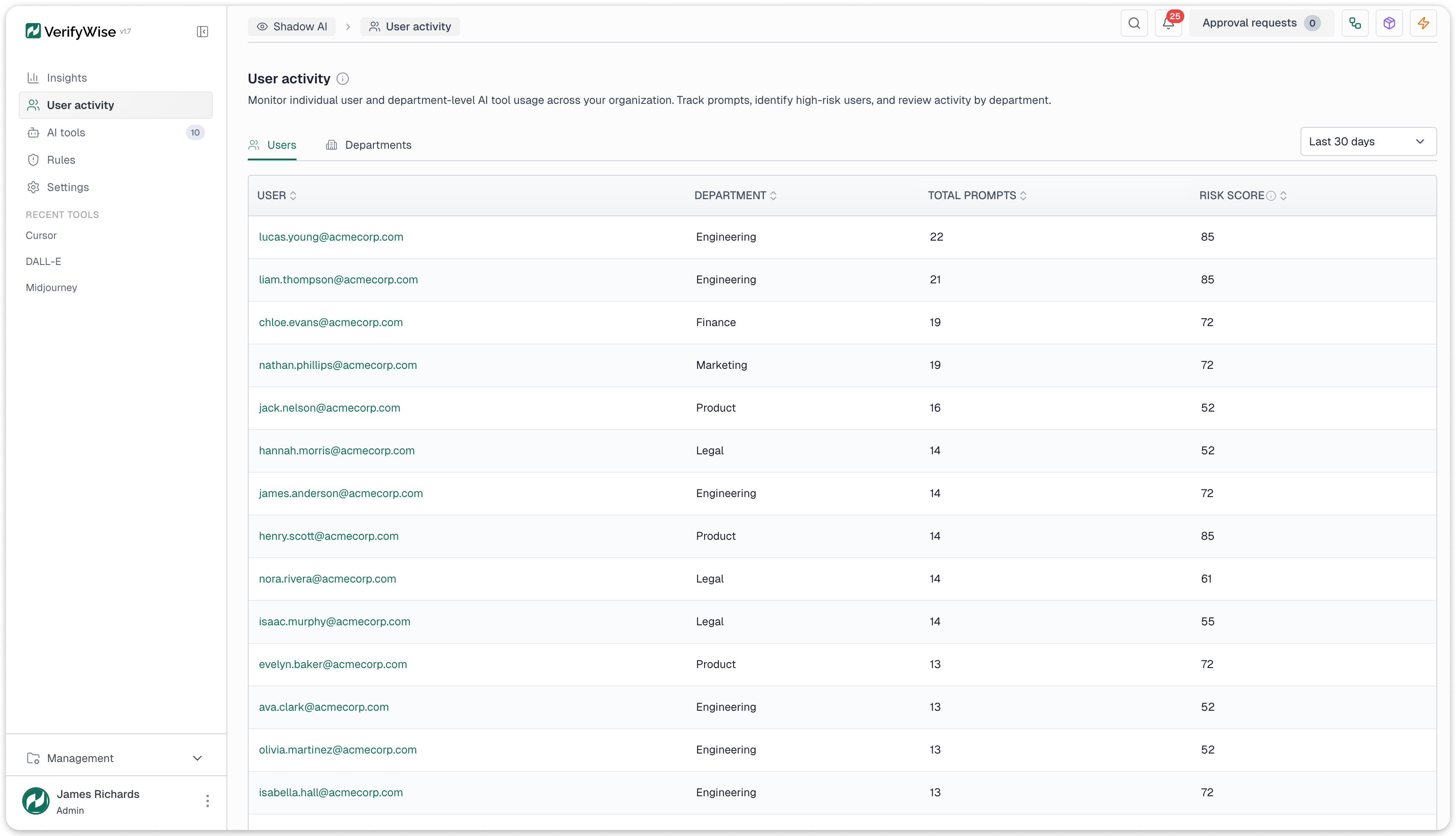

User activity data is available at two levels. At the individual level: each user's event counts, tools accessed, risk scores, and department. At the department level: aggregate usage, top tools, and maximum risk exposure, suitable for reporting to department heads and compliance committees.
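The department-level view is an aggregation over enriched events. In the minimal version below, each event is assumed to carry `department`, `tool`, and `username` fields, and per-tool risk scores are assumed to be available as a dictionary. Both shapes are hypothetical.

```python
from collections import Counter, defaultdict


def department_summary(events: list[dict], tool_risk: dict[str, int]) -> dict[str, dict]:
    """Per-department event count, distinct users, top tools, and max risk."""
    tool_counts: dict[str, Counter] = defaultdict(Counter)
    users: dict[str, set] = defaultdict(set)
    for e in events:
        tool_counts[e["department"]][e["tool"]] += 1
        users[e["department"]].add(e["username"])
    return {
        dept: {
            "events": sum(counts.values()),
            "unique_users": len(users[dept]),
            "top_tools": [tool for tool, _ in counts.most_common(3)],
            # Tools without a score yet count as 0 (an assumption)
            "max_risk": max(tool_risk.get(tool, 0) for tool in counts),
        }
        for dept, counts in tool_counts.items()
    }
```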

Data retention

A three-tier retention model balances depth with storage:

- Raw events are kept for 30 days with full detail for forensic investigation and drill-down

- Daily rollups are kept for one year, providing aggregated summaries for trend analysis and quarterly reporting

- Monthly rollups are kept permanently for year-over-year comparisons and regulatory inquiries that span multiple years
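The three tiers can be expressed as two rollup functions plus a pruning pass. The key layout (day or month, tool, department), the plain event counts, and the `prune` helper are assumptions made for illustration. A real rollup would likely also carry unique-user counts and risk scores. Rollups run before pruning, so aggregated history survives after the raw events are deleted.

```python
from collections import Counter
from datetime import date, datetime, timedelta

RAW_RETENTION = timedelta(days=30)     # full-detail events
DAILY_RETENTION = timedelta(days=365)  # daily rollups; monthly rollups are kept forever


def daily_rollup(events: list[dict]) -> Counter:
    """Collapse raw events into (day, tool, department) -> event count."""
    return Counter((e["timestamp"].date(), e["tool"], e["department"]) for e in events)


def monthly_rollup(daily: Counter) -> Counter:
    """Collapse daily rows into (year, month, tool, department) -> event count."""
    monthly: Counter = Counter()
    for (day, tool, dept), n in daily.items():
        monthly[(day.year, day.month, tool, dept)] += n
    return monthly


def prune(raw: list[dict], daily: Counter, today: date) -> tuple[list[dict], Counter]:
    """Drop raw events older than 30 days and daily rows older than one year."""
    raw_cutoff = today - RAW_RETENTION
    daily_cutoff = today - DAILY_RETENTION
    kept_raw = [e for e in raw if e["timestamp"].date() >= raw_cutoff]
    kept_daily = Counter({k: n for k, n in daily.items() if k[0] >= daily_cutoff})
    return kept_raw, kept_daily


# Usage: roll up first, then prune, so no history is lost.
events = [
    {"timestamp": datetime(2024, 5, 1, 9, 0), "tool": "ChatGPT", "department": "Finance"},
    {"timestamp": datetime(2024, 5, 1, 14, 0), "tool": "ChatGPT", "department": "Finance"},
    {"timestamp": datetime(2024, 3, 2, 10, 0), "tool": "Cursor", "department": "Engineering"},
]
daily = daily_rollup(events)
monthly = monthly_rollup(daily)
raw, daily = prune(events, daily, today=date(2024, 5, 20))
```

In this example, the March event falls out of the raw tier on May 20. It remains visible in both the daily and the monthly rollups.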

All data is searchable, sortable, and filterable. Exports are available for compliance workflows that require data extraction for auditors or regulatory submissions.

What Shadow AI detection can and cannot do

It's important to be clear about scope. Shadow AI detection operates on network metadata. Here is what it covers and where it has limits.

What it does:

- Discovers cloud and SaaS AI tool usage from network logs with no endpoint agents or browser extensions

- Identifies over 50 AI tools across five categories and extracts specific model names from API traffic across AWS Bedrock, Azure OpenAI, Google Vertex AI, and direct provider endpoints

- Scores risk on a 0-to-100 scale using four weighted factors: approval status, vendor compliance posture, usage volume, and department sensitivity

- Alerts teams through six trigger types and four action types (notifications, tasks, governance reviews, risk entries)

- Converts detected tools into governed assets through a four-step wizard that creates Model Inventory entries, assigns owners, and starts risk assessments

- Parses four log formats out of the box (Zscaler, Netskope, Squid, generic key-value)

What it does not do:

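- Inspect encrypted HTTPS content. It operates on metadata: domains, URL paths, timestamps, user identifiers. A proxy can usually log the destination domain of HTTPS traffic without decrypting it, but URL paths, and therefore model identifiers, are only available if a TLS-intercepting proxy or API gateway upstream records them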

- Detect locally hosted AI models (Ollama, local Llama instances, private inference servers). These don't generate external network traffic

- Capture actual prompts, responses, or conversations. It works on access metadata only: who accessed what, when, from which department, how often

- Block traffic. It is advisory only. Blocking access requires configuration at the proxy, firewall, or DNS layer

- Run behavioral anomaly detection. Alerting is rule-based with explicit thresholds, not ML-driven pattern analysis

Getting started

Shadow AI detection is one module in the VerifyWise AI governance platform, alongside model inventory, risk management, compliance framework tracking, and LLM evaluations. If your organization already collects web proxy or firewall logs, you have the data. The question is whether you're using it to find AI tools before they become compliance incidents.

See the Shadow AI Detection page for a walkthrough of the full module.

Book a demo to see how it works with your existing log infrastructure, or explore the full VerifyWise platform to see how detection connects to governance.